- Understanding AI Visibility: The New Search Frontier

- Essential Metrics for Measuring Visibility in AI Search Engines

- Top Tools for Tracking Visibility in AI Search

- Implementing Your AI Visibility Tracking Framework

- Interpreting Data and Optimizing for Better AI Visibility

- Future-Proofing Your AI Visibility Strategy

- Conclusion: Building a Sustainable AI Visibility Framework

- FAQ: AI Visibility Tracking

Your website ranks #1 on Google for target keywords. Traffic is solid. Then you discover ChatGPT, Gemini, and Perplexity never mention your brand when answering questions you’ve dominated in traditional search for years. Welcome to the invisible gap.

Understanding AI Visibility: The New Search Frontier

The search landscape shifted when AI-powered platforms started answering queries directly. ChatGPT serves over 100 million weekly active users. Google AI Overviews dominate 84% of search results. Perplexity, Claude, and Gemini handle millions of daily queries through answer engines that bypass traditional Google Search entirely.

AI search optimization differs fundamentally from traditional SEO. Understanding how AI snippets rank and which factors drive AI search ranking matters because brand visibility in AI depends on different signals than conventional organic rankings. When users query these platforms, AI-powered SERP features and AI Mode responses synthesize information without clear attribution. Your brand might appear or vanish completely, and traditional analytics won’t capture it.

Generative AI search increasingly handles research-oriented queries, detailed comparisons, and complex how-to questions that drive qualified leads. Being invisible in these platforms means losing mindshare when prospects form opinions about solutions — a visibility gap that translates directly to lost opportunities and weakened competitive positioning.

Key Takeaways:

- AI platforms handle billions of queries without generating traditional website visits

- Traditional SEO rankings don’t predict AI-generated response visibility

- New measurement frameworks capture brand presence in conversational answers

- Enterprise brands risk losing high-intent prospects during research phases

Why Traditional SEO Metrics Fall Short in AI Search

A site ranking #1 in Google Search can be absent from ChatGPT’s answer to identical queries. Traditional ranking metrics, CTR, and position tracking become meaningless when AI platforms synthesize information from dozens of sources without clear attribution.

Google Analytics can’t track brand mentions in AI conversations. Search Console doesn’t capture AI snippet ranking. Keyword ranking position tools don’t monitor how answer engines perceive brand authority. Zero-click AI results where users get complete answers represent valuable visibility invisible to conventional SERP tracking. Your brand could be cited across multiple platforms, driving branded traffic increases and improving conversions, yet traditional metrics show nothing.

| Category | Traditional SEO Metrics | AI Visibility Metrics (New) | Key Difference / Why It Matters |
|---|---|---|---|
| Visibility / Ranking | SERP Rankings (position in Google’s organic results) | AI Answer Inclusion Rate (frequency content appears in AI answers or summaries) | AI models often summarize or cite only a few sources, so ranking ≠ visibility. |
| Traffic Measurement | Organic Click-Through Rate (CTR) | AI Referral Share (traffic coming directly from AI search/chat tools) | AI summaries reduce direct clicks, so referral value needs new measurement. |
| Impressions | Search Impressions (Google Search Console data) | AI Mentions / Citations (number of times brand or site is referenced by AI models) | Visibility now includes being cited by AI, not just appearing in search. |
| Engagement | Bounce Rate, Time on Page | User Engagement Post-AI (how users interact after landing from AI-generated links) | AI answers may bring more qualified, context-aware visitors. |
| Keyword Focus | Keyword Rankings, Search Volume | Query Intent Clustering (AI-driven query understanding and topic coverage) | AI queries are more conversational, so intent-based optimization becomes key. |
| Content Optimization | On-Page SEO (metadata, headings, keywords) | Semantic Coverage & Entity Presence (how well content aligns with AI knowledge graphs) | AI visibility depends on deep topic coverage and structured knowledge. |
| Backlinks | Number and Quality of Inbound Links | Model Citation Authority (how often AI models select or trust your content) | Authority now includes model trust, not just web links. |
| Technical SEO | Site Speed, Crawlability, Mobile Usability | Data Accessibility (schema markup, structured data readability for AI) | AI systems rely more on structured data for understanding and citation. |
| Brand Awareness | Branded Search Volume | AI Brand Recall (how often brand appears or is recalled in AI outputs) | Visibility in AI responses builds brand trust beyond traditional search. |
| Conversion Metrics | Goal Conversions, Revenue from Organic | AI Conversion Influence (conversions initiated after AI-assisted queries) | Attribution models must include AI-assisted discovery paths. |

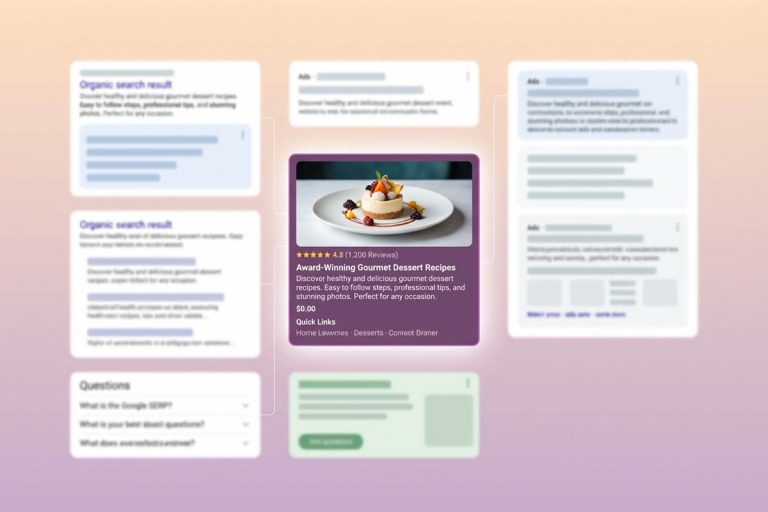

The Hybrid Search Behavior: How Users Navigate Between Traditional and AI Search

AI search behavior reveals distinct patterns based on search intent and device usage. Quick factual queries still go to Google Search, particularly on mobile, where speed matters. Complex questions requiring detailed explanations increasingly go to ChatGPT, Claude, or Perplexity, especially on desktop, where users have time for deeper research. Voice queries on smart speakers now route directly to AI platforms as full-sentence prompts rather than keyword fragments.

User search habits are evolving rapidly. Research queries, product comparisons, and how-to questions migrate toward conversational interfaces where conversational search SEO and voice search AI optimization become critical. Query types matter — transactional searches remain in traditional engines while informational and investigational searches shift to AI platforms. Understanding this user behavior split is essential for a comprehensive visibility strategy.

This creates dual visibility requirements. Users often start with AI for research, then move to traditional search engines for specific vendors. A brand invisible during AI research loses authority positioning, making conversion harder. The customer journey spans both ecosystems — ignore either at your peril.

Essential Metrics for Measuring Visibility in AI Search Engines

Measuring visibility in AI platforms requires different metrics than traditional SEO, which focuses on rankings and traffic. AI visibility metrics focus on brand mentions, citations, context quality, and competitive positioning. The role they play is similar to how featured snippets and the Knowledge Graph influence traditional search, but they call for distinct measurement approaches.

Brand mention frequency tracks how often your brand appears when AI platforms answer relevant queries. Citation rate measures how often platforms actively link to your content as an authoritative source, as opposed to mentioning you in passing. Share of voice compares your visibility against competitors: appearing in 30% of category queries while competitors hit 60% means losing mindshare regardless of traditional performance.

Context quality evaluates whether mentions are positive recommendations, neutral listings, or negative cautions — essentially sentiment analysis for AI responses. Prompt coverage identifies which query types trigger your brand, revealing content gaps and authority deficits. Building topical authority across relevant subject areas increases citation frequency and mention quality.

| Metric | What It Measures | Why It Matters |
|---|---|---|
| Brand Mention Frequency | Appearance rate in AI responses | Baseline visibility indicator |
| Citation Rate | Percentage of mentions with content links | Authority signal and traffic driver |
| Share of Voice | Visibility vs competitors | Competitive positioning |
| Context Quality | Sentiment of mentions | Determines actual value |
| Prompt Coverage | Query types triggering brand | Identifies content gaps |
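As a rough illustration of the first two metrics in the table, the sketch below computes mention frequency and citation rate from a hand-logged set of AI responses. The `TrackedResponse` record, its field names, and the sample prompts are assumptions made for this example; they do not come from any tracking tool.

```python
from dataclasses import dataclass


@dataclass
class TrackedResponse:
    """One logged AI answer (hypothetical record layout)."""
    prompt: str
    brand_mentioned: bool  # brand name appears in the answer
    brand_cited: bool      # answer links to the brand's content


def mention_frequency(responses):
    """Share of all responses that mention the brand."""
    if not responses:
        return 0.0
    return sum(r.brand_mentioned for r in responses) / len(responses)


def citation_rate(responses):
    """Share of mentions that also link to the brand's content."""
    mentions = [r for r in responses if r.brand_mentioned]
    if not mentions:
        return 0.0
    return sum(r.brand_cited for r in mentions) / len(mentions)


# Illustrative data only, not real measurements.
sample = [
    TrackedResponse("best crm for startups", True, True),
    TrackedResponse("how to track sales leads", True, False),
    TrackedResponse("crm vs spreadsheet", False, False),
    TrackedResponse("top sales tools", False, False),
]
print(f"Mention frequency: {mention_frequency(sample):.0%}")  # 50%
print(f"Citation rate: {citation_rate(sample):.0%}")          # 50%
```

Citation rate is calculated here as a share of mentions rather than of all responses, which matches how the goal examples later in the article phrase it ("15% to 35% of mentions").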

Brand Mentions vs. Citations: Understanding the Difference

A mention occurs when your brand name appears: “Companies like Salesforce, HubSpot, and Monday.com offer CRM solutions.” An AI citation happens when AI platforms link to your content as the source: “According to HubSpot’s report, 73% of sales teams use AI tools.”

Citations carry far more weight because they signal that platforms view your content as authoritative and trustworthy. Citations drive actual traffic while building authority signals. Mentions create awareness; citations establish thought leadership. Being mentioned puts you in consideration; being cited positions you as the definitive expert.
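The mention/citation distinction can be approximated in code when a platform returns both the answer text and a list of source links. The following is a minimal sketch under that assumption; the brand "Acme", the domain `acme.example`, and the URLs are placeholders, and real answers may need fuzzier brand matching than a substring check.

```python
from urllib.parse import urlparse


def classify_response(answer_text, cited_urls, brand, brand_domain):
    """Return 'citation', 'mention', or 'absent' for one AI answer."""
    for url in cited_urls:
        host = urlparse(url).hostname or ""
        # Count the brand's own domain and any of its subdomains.
        if host == brand_domain or host.endswith("." + brand_domain):
            return "citation"
    if brand.lower() in answer_text.lower():
        return "mention"
    return "absent"


# Placeholder brand, domain, and URLs for illustration.
print(classify_response(
    "Companies like Acme and RivalCo offer CRM solutions.",
    [], "Acme", "acme.example"))                       # mention
print(classify_response(
    "According to Acme's report, many sales teams use AI tools.",
    ["https://www.acme.example/reports/sales-ai"],
    "Acme", "acme.example"))                           # citation
```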

Share of Voice and Competitive Positioning in AI Platforms

AI share of voice (SOV) measures the percentage of relevant prompts that include your brand versus competitors, a critical metric for understanding competitive visibility across platforms. A 30% SOV means appearing in 3 out of 10 category queries. This reveals competitive dynamics that traditional SEO masks, particularly in the Search Generative Experience, where brand perception forms before users ever visit a website.

Sentiment tracking adds crucial context: are mentions positive recommendations, neutral listings, or negative warnings? The answer reveals not just visibility in AI platforms but the quality of that visibility. Competitive benchmarking matters because brands that consistently appear alongside market leaders benefit from the association, while those omitted lose market share and mindshare despite strong traditional performance.
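To make the share-of-voice arithmetic concrete, here is a small sketch that reproduces the "3 out of 10" example. It assumes you have already recorded, per prompt, the set of brands named in the answer; `YourBrand` and `RivalCo` are placeholder names.

```python
from collections import Counter


def share_of_voice(brands_per_prompt, tracked_brands):
    """Return, per brand, the fraction of prompts whose answer named it."""
    total = len(brands_per_prompt)
    counts = Counter()
    for mentioned in brands_per_prompt:
        for brand in tracked_brands:
            if brand in mentioned:
                counts[brand] += 1
    return {b: (counts[b] / total if total else 0.0) for b in tracked_brands}


# Ten category prompts: "YourBrand" appears in 3 answers, "RivalCo" in 6.
answers = (
    [{"YourBrand", "RivalCo"}] * 3
    + [{"RivalCo"}] * 3
    + [set()] * 4
)
sov = share_of_voice(answers, ["YourBrand", "RivalCo"])
print(sov)  # {'YourBrand': 0.3, 'RivalCo': 0.6}
```

Because each brand is measured against the same prompt set, the shares do not need to add up to 100%; several brands can appear in the same answer.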

Top Tools for Tracking Visibility in AI Search

The AI visibility tracking landscape offers solutions from enterprise platforms to starter tools. Choosing depends on organizational needs, budget, and technical requirements. Key differentiators: platform coverage (which engines are monitored — ChatGPT, Gemini, Claude, Perplexity, Google AI Overviews), prompt customization, historical tracking, competitive comparison, and integration capabilities.

| Tool | Best For | Platform Coverage | Starting Price | Key Strength |
|---|---|---|---|---|
| seoClarity ArcAI | Enterprise | Comprehensive | N/A | Deep integration |
| Semrush AI Toolkit | Growing businesses | Major platforms | $99/month | Unified tracking |
| Peec AI | LLM monitoring | ChatGPT, Claude | €89/month | Advanced testing |
| ZipTie | Budget startups | Major platforms | $69/month | Affordable baseline |
| Profound | Technical teams | Multi-model | N/A | Engineering-level |

At LeadCraft, AI visibility tracking has become a cornerstone of comprehensive digital marketing solutions implemented across enterprise clients. The technical SEO team integrates these platforms with existing analytics stacks, ensuring seamless data flow and actionable reporting. As a leading agency specializing in measurable growth, LeadCraft builds complete AI visibility frameworks tailored to industry dynamics, competitive landscapes, and business KPIs. This systematic approach transforms raw tracking data into strategic insights impacting bottom-line results.

Enterprise-Level Solutions for Complex Organizations

Enterprise platforms handle organizational complexity that mid-tier tools can’t address. seoClarity ArcAI provides enterprise AI tracking integrated with the rest of the seoClarity platform, offering deep historical data, custom dashboards, and multi-user collaboration. Profound specializes in LLM monitoring with advanced prompt engineering and API access for custom integrations.

These cost $500-2000+ monthly but justify investment for large organizations through unlimited team collaboration seats, API connectivity, white-label reporting, dedicated support, and advanced segmentation. ROI calculation is straightforward — understanding visibility gaps can identify millions of opportunities traditional metrics miss.

Budget-Friendly Options for Growing Businesses

Affordable AI tracking solutions provide essential metrics without enterprise investment, making SMB AI visibility tools accessible for organizations prioritizing ROI and value metrics. Cost-effective AI monitoring starts with platforms designed specifically for small business needs and budget constraints.

ZipTie offers basic mention tracking across major platforms starting at $50-100 per month, covering both AI-powered SERP features and direct platform responses. LLMrefs provides citation monitoring with limited prompts at similar pricing.

Trade-offs are clear: fewer platforms (2-4 major ones), limited historical data (30-90 days), restricted prompts (10-50 queries), and no advanced features like sentiment analysis. However, they’re sufficient for baseline visibility and identifying gaps. Supplement with manual testing: regularly querying ChatGPT, Gemini, Perplexity, and Claude with relevant prompts provides directional insights at zero cost.

Cross-Platform Coverage Considerations

Cross-platform tracking provides the complete picture that single-platform monitoring can’t deliver. Effective AI platform coverage requires understanding how different search engines operate.

ChatGPT draws from training data with distinct citation preferences, which makes ChatGPT answers particularly valuable for brand mentions. Gemini leverages Google’s Knowledge Graph with different authority signals. Claude emphasizes nuanced responses with unique sourcing patterns. Perplexity focuses on academic sources with real-time web access.

Google AI Overviews pull from search results but apply different factors than organic listings. Microsoft Copilot integrates enterprise contexts, while AI Mode in various platforms adjusts response styles. Specialized vertical AIs for healthcare and legal industries require targeted tracking strategies.

Multi-engine monitoring reveals visibility gaps that single-platform tracking misses. Platform prioritization should align with audience behavior. B2B technology companies should prioritize ChatGPT and Claude (popular with professionals), while consumer brands need a strong Google AI Overviews presence.

Comprehensive AI tracking across all platforms delivers complete visibility, but resource constraints may require focusing on 2-3 primary platforms where target audiences conduct research.

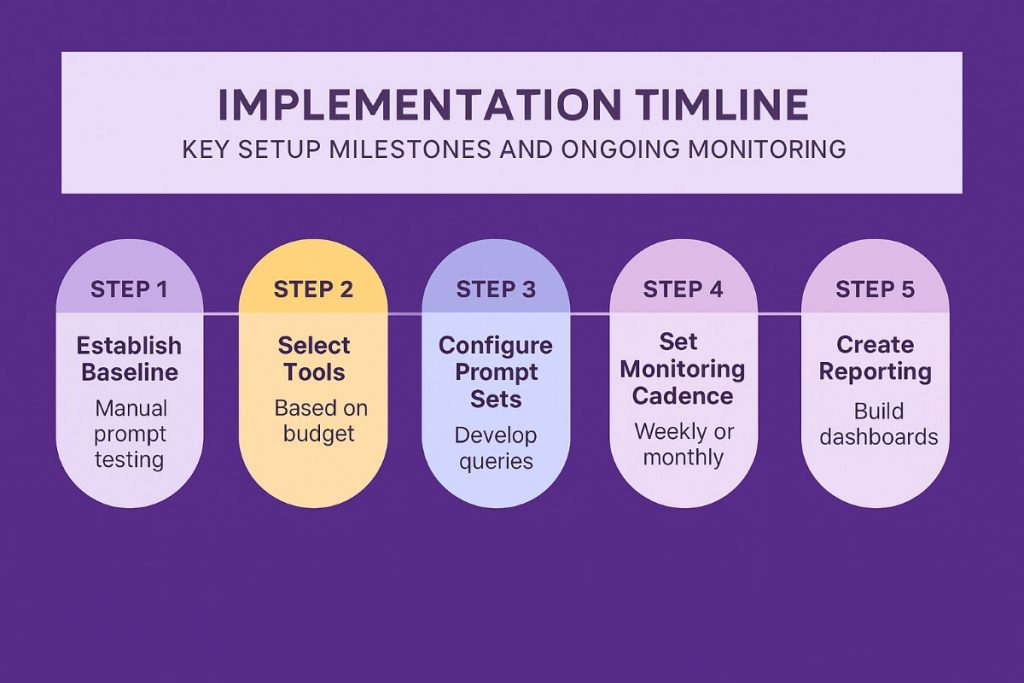

Implementing Your AI Visibility Tracking Framework

Establishing advanced AI monitoring requires systematic implementation aligned with business objectives.

Step 1: Establish Baseline. Manually test 20-30 core prompts across 3-4 platforms. Document which responses mention your brand, what context they provide, whether they cite your content, and how competitors compare.

Step 2: Select Tools. Choose platforms based on budget, coverage priorities, and features. Enterprises need comprehensive solutions with API access. Growing businesses might start with mid-tier tools.

Step 3: Configure Prompt Sets. Develop 30-50 high-priority queries covering branded searches, product categories, pain points, and comparisons. Start focused, then expand after establishing reliable tracking.

Step 4: Set Monitoring Cadence. Establish schedules: weekly during active campaigns, monthly for maintenance, quarterly for strategic planning. Consistency matters more than frequency.

Step 5: Create Reporting. Build dashboards combining AI visibility with traditional analytics. Track correlations between mention increases and branded search lifts, lead quality improvements, or sales cycle changes.
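For the manual baseline in Step 1, a consistent log format makes later comparisons much easier. The sketch below shows one possible layout and writes it as CSV so it can be opened in a spreadsheet. The `BaselineRecord` fields and the sample row are assumptions chosen to mirror what Step 1 asks you to document.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class BaselineRecord:
    """One manually tested prompt on one platform (hypothetical layout)."""
    platform: str
    prompt: str
    brand_mentioned: bool
    brand_cited: bool
    context: str       # e.g. "recommended", "listed", "cautioned"
    competitors: str   # semicolon-separated competitor names seen


def to_csv(records):
    """Serialize baseline records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(BaselineRecord)]
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Illustrative row only.
baseline = [
    BaselineRecord("ChatGPT", "best crm for startups",
                   True, False, "listed", "RivalA;RivalB"),
]
print(to_csv(baseline))
```

Keeping the same columns for every test run means the weekly, monthly, and quarterly checks from Step 4 can be appended to one file and compared over time.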

Defining Tracking Goals and Success Metrics

Effective goal-setting connects metrics to business outcomes. Avoid vanity metrics. Instead, establish objectives leading to revenue:

Goal 1: Achieve presence in 60% of category-defining queries within 6 months.

Goal 2: Increase citation rate from 15% to 35% of mentions.

Goal 3: Grow SOV versus top competitors from 20% to 40%.

Goal 4: Appear in the top 3 mentioned brands for priority categories.

Realistic benchmarks help — most industries show 10-30% presence initially, with leaders achieving 40-60%. Connect goals to downstream business metrics: increased branded search, higher-quality leads, improved sales efficiency.
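One simple way to report against goals like these is to express each as progress from a baseline toward a target. The sketch below assumes that framing; the baseline for Goal 1 and all "current" values are made-up illustrative numbers, not benchmarks.

```python
def goal_progress(baseline, current, target):
    """Fraction of the distance from baseline to target covered so far,
    clamped to the range 0.0 to 1.0."""
    if target == baseline:
        return 1.0
    progress = (current - baseline) / (target - baseline)
    return max(0.0, min(1.0, progress))


# (label, baseline, current, target) in percentage points.
# Baseline of 25 for the first goal and all current values are illustrative.
goals = [
    ("Category query presence", 25, 40, 60),
    ("Citation rate (share of mentions)", 15, 25, 35),
    ("SOV vs top competitors", 20, 30, 40),
]
for label, baseline, current, target in goals:
    print(f"{label}: {goal_progress(baseline, current, target):.0%} of the way")
```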

Creating Effective Prompt Sets for Comprehensive Testing

Building strategic prompts requires thinking beyond keywords to how users actually query AI platforms. Structure across five categories:

Branded Queries: “What is [Brand]?”, “Tell me about [Brand]’s approach to [topic]”.

Product Category Queries: “Best [product] for [use case]”.

Problem/Solution Queries: “How to solve [pain point]?”.

Comparison Queries: “Compare [Brand] vs [Competitor]”.

Buying Intent: “Should I buy [product]?”.

Use conversational, natural phrasing matching actual AI search behavior — full sentences, not keyword strings. Test variations — different phrasings yield different results, revealing optimization opportunities.
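The five categories above can be turned into a concrete prompt set by filling the bracketed slots with your own values. The following is a minimal sketch of that expansion; the slot values (brand "Acme", competitors, use cases) are placeholders, and the templates are taken from the list above.

```python
from itertools import product
from string import Formatter

TEMPLATES = {
    "branded": ["What is {brand}?",
                "Tell me about {brand}'s approach to {topic}"],
    "category": ["Best {product} for {use_case}"],
    "problem": ["How to solve {pain_point}?"],
    "comparison": ["Compare {brand} vs {competitor}"],
    "buying": ["Should I buy {product}?"],
}


def expand(template, values):
    """Fill a template with every combination of its slot values."""
    names = [name for _, name, _, _ in Formatter().parse(template) if name]
    combos = product(*(values[name] for name in names))
    return [template.format(**dict(zip(names, combo))) for combo in combos]


def build_prompt_set(templates, values):
    """Expand every template in every category."""
    return {
        category: [p for t in ts for p in expand(t, values)]
        for category, ts in templates.items()
    }


# Placeholder slot values for illustration.
values = {
    "brand": ["Acme"],
    "topic": ["lead scoring"],
    "product": ["CRM"],
    "use_case": ["startups", "agencies"],
    "pain_point": ["missed follow-ups"],
    "competitor": ["RivalA", "RivalB"],
}
prompt_set = build_prompt_set(TEMPLATES, values)
for category, prompts in prompt_set.items():
    print(category, prompts)
```

Adding a second phrasing to any category (for example, a longer full-sentence version of the comparison prompt) is a one-line change, which makes it easy to test the variations the text recommends.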

Interpreting Data and Optimizing for Better AI Visibility

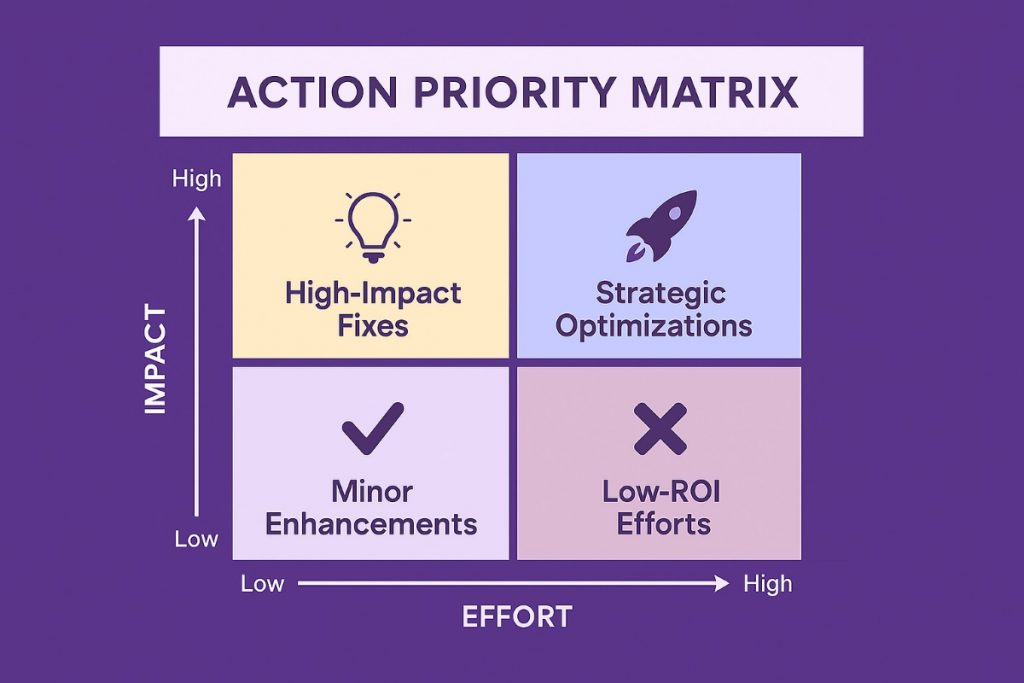

Raw tracking data only matters when it is translated into optimization decisions. Here’s the analytical framework:

Identify visibility gaps by comparing mention rates across query categories. Complete absence signals content gaps or authority deficits needing immediate attention.

Analyze the context of mentions, not just their presence. Are you recommended as a solution or merely listed? Context quality determines whether visibility drives value.

Study citation sources to understand what makes content citation-worthy. Which pages get cited most? What characteristics — comprehensive depth, original data, clear structure, authoritative credentials?

Examine competitive patterns to identify dynamics. Which brands dominate query types and why? This intelligence reveals where to compete versus differentiate.

Track temporal trends to measure improvement. Is visibility increasing, stable, or declining? Sudden drops might indicate content optimization issues or competitive displacement.

Connect insights to priorities using impact analysis. High-impact opportunities (complete absence in important queries) deserve immediate attention. Medium-impact improvements (low-quality mentions) warrant systematic optimization. Low-impact refinements get attention after high-impact work completes.
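The three impact tiers can be written as a simple rule set, which helps keep prioritization consistent between reviews. This is a sketch only: the 50% threshold for "low-quality mentions" and the sample numbers are assumptions, not values from the article.

```python
def impact_tier(mention_rate, positive_share, is_priority_query):
    """Map one query category to the tiers described in the text."""
    if is_priority_query and mention_rate == 0:
        return "high"    # complete absence in important queries
    if mention_rate > 0 and positive_share < 0.5:  # assumed threshold
        return "medium"  # present, but mentions are low quality
    return "low"         # refinements for later


# (mention_rate, positive_share, is_priority_query), illustrative values.
categories = {
    "comparison": (0.0, 0.0, True),
    "how-to": (0.4, 0.2, True),
    "branded": (0.9, 0.8, False),
}
order = ["high", "medium", "low"]
ranked = sorted(categories,
                key=lambda c: order.index(impact_tier(*categories[c])))
print(ranked)  # ['comparison', 'how-to', 'branded']
```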

Identifying Content Gaps and Optimization Opportunities

Systematic gap analysis surfaces the highest-leverage opportunities. Map tracked prompts to existing content and ask which queries have no relevant content. These represent pure opportunity.

Analyze competitor patterns: where do they appear while you’re missing? What topics do they cover comprehensively? This intelligence reveals what platforms value for citations. Examine existing content generating mentions but not citations — these need enhancement through added data, improved structure, increased depth, or stronger expertise signals. Converting mentions to citations dramatically improves ROI on existing assets.

Identify topical authority gaps — broad areas lacking depth. Platforms favor brands demonstrating comprehensive expertise across related topics. Building content clusters thoroughly addressing subjects from multiple angles establishes authority earning consistent citations.
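The gap checks described above (prompts with no matching content, prompts where only competitors appear, and mentions that never become citations) can be sketched as one pass over your tracking results. The data structures, brand names, and page paths below are hypothetical.

```python
def find_gaps(prompt_results, content_map, brand):
    """Group tracked prompts by the kind of gap they reveal."""
    gaps = {"no_content": [], "competitor_only": [], "mention_not_cited": []}
    for prompt, result in prompt_results.items():
        mentioned = brand in result["mentioned"]
        cited = brand in result["cited"]
        if not content_map.get(prompt):
            gaps["no_content"].append(prompt)         # nothing published yet
        if not mentioned and result["mentioned"]:
            gaps["competitor_only"].append(prompt)    # others appear, you don't
        if mentioned and not cited:
            gaps["mention_not_cited"].append(prompt)  # candidate for enhancement
    return gaps


# Illustrative tracking results and content inventory.
prompt_results = {
    "best crm for startups": {"mentioned": {"RivalCo"}, "cited": set()},
    "how to solve missed follow-ups": {
        "mentioned": {"YourBrand", "RivalCo"}, "cited": {"RivalCo"}},
    "what is YourBrand": {"mentioned": {"YourBrand"}, "cited": {"YourBrand"}},
}
content_map = {
    "how to solve missed follow-ups": ["/blog/follow-up-guide"],
    "what is YourBrand": ["/about"],
}
gaps = find_gaps(prompt_results, content_map, "YourBrand")
print(gaps)
```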

Measuring ROI and Business Impact

Connecting visibility improvements to tangible outcomes requires accepting that impact is often indirect. Measurement relies on proxy metrics and correlation analysis.

Direct Traffic Attribution: Monitor branded search increases following visibility improvements. Users discover brands via AI platforms, then search for them directly.

Lead Quality Analysis: Track whether leads who report AI discovery show different conversion rates, deal sizes, or cycle lengths.

Sales Cycle Velocity: Measure whether prospects who discovered you through AI require less education, move faster through the pipeline, or show higher win rates.

Competitive Displacement: Monitor whether improved SOV correlates with market share gains or competitive win rates.

Attribution remains challenging because interactions don’t generate traditional tracking. Implement systematic lead source tracking through form questions and CRM fields. Correlate visibility improvements with qualitative signals and downstream performance metrics to build ROI cases.
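Since the measurement here leans on correlation, a small worked example may help. The sketch below computes a Pearson correlation between monthly share of voice and branded search volume; both series are invented for illustration, and a high correlation on its own does not show that AI visibility caused the lift.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Six months of made-up data: SOV in percent, branded searches per month.
sov_pct = [20, 24, 27, 33, 38, 40]
branded_searches = [1000, 1050, 1150, 1300, 1380, 1500]
r = pearson(sov_pct, branded_searches)
print(f"Correlation between SOV and branded search: {r:.2f}")
```

With only a handful of monthly data points the estimate is noisy, so it works best alongside the qualitative signals mentioned above, such as lead-source answers captured in forms and CRM fields.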

Future-Proofing Your AI Visibility Strategy

The landscape continues evolving rapidly. Staying ahead requires understanding emerging trends while building foundational principles.

Multimodal AI Processing — Future platforms process images, video, and text simultaneously. Visual content optimization becomes critical.

Personalized AI Agents — Platforms increasingly learn preferences, making visibility more context-dependent.

Specialized Vertical AIs — Industry-specific platforms emerge for legal, medical, financial domains.

Voice-Based AI Search — Smart speakers prioritize conversational content.

Focus on foundational principles over platform-specific tactics. Building genuine topical authority, creating comprehensive content, establishing clear information architecture, and developing strong entity relationships remain valuable regardless of platform evolution. Organizations nailing fundamentals adapt faster than those chasing temporary tactics.

Implement adaptive practices: monthly reviews of platform adoption trends, quarterly reassessment of tracking priorities, and continuous content optimization based on citation analysis. AI visibility isn’t set-it-and-forget-it — it’s an ongoing strategic priority requiring consistent attention.

Organizing Content for Maximum AI Discovery

Content architecture affects visibility in ways that traditional optimization does not cover. Structure content using these principles:

Clear Entity Relationships — Explicitly connect your brand to relevant topics using structured markup and natural language. Help platforms understand what you’re authoritative about.

Comprehensive Topic Coverage — Develop content clusters thoroughly addressing subjects from multiple angles: overviews, deep-dives, how-tos, case studies, research.

Strong Information Hierarchy — Organize with clear heading structures, logical flow, and scannable formatting AI easily parses. Use descriptive headings, consistent formatting, digestible chunks.

Authoritative Signals — Include expertise elements: author credentials, cited sources, original data, real examples demonstrating application.

The key difference from traditional SEO: optimize for topical authority and clear architecture. Help platforms understand what you’re the definitive expert on and when to cite you.
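For the "structured markup" mentioned under Clear Entity Relationships, one common option is schema.org JSON-LD. The sketch below builds an `Organization` object using the schema.org `knowsAbout` and `sameAs` properties; the company name, URLs, and topics are placeholders, and whether a given AI platform actually reads this markup is an assumption rather than something the article establishes.

```python
import json


def organization_jsonld(name, url, topics, profiles):
    """Build a schema.org Organization description as a dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "knowsAbout": topics,   # topics the brand claims expertise in
        "sameAs": profiles,     # official profiles for the same entity
    }


# Placeholder values for illustration.
markup = organization_jsonld(
    name="Acme",
    url="https://www.acme.example",
    topics=["CRM software", "lead scoring", "sales automation"],
    profiles=["https://www.linkedin.com/company/acme-example"],
)
print(json.dumps(markup, indent=2))
```

The printed JSON would typically be placed in a `<script type="application/ld+json">` tag on the relevant page.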

Conclusion: Building a Sustainable AI Visibility Framework

AI visibility tracking represents a fundamental shift in measuring search presence. Success requires a strategic framework connecting metrics to business outcomes while building sustainable advantages.

Like traditional SEO, AI visibility is an ongoing strategic priority that requires consistent attention. Organizations that establish strong visibility now benefit from compounding authority that becomes increasingly difficult for competitors to displace.

Start with practical first steps: conduct baseline testing this week using 20-30 prompts, select tracking tools within the month, establish systematic monitoring within the quarter. The visibility gap represents both risk and opportunity — waiting means losing mindshare while competitors establish authority.

FAQ: AI Visibility Tracking

What is AI search visibility and why does it matter for my business?

AI search visibility measures how often your brand appears in responses from ChatGPT, Gemini, Claude, Perplexity, and Google AI Overviews. It matters because AI platforms handle billions of research and recommendation queries without sending users to traditional websites. If your brand is absent from AI-generated answers, you’re invisible during critical decision-making phases regardless of traditional rankings.

How is tracking visibility in AI search different from traditional SEO tracking?

Traditional tracking focuses on keyword rankings, SERP positions, organic traffic, and CTR. AI visibility tracking measures brand mentions, citation rates, share of voice, sentiment, and query types triggering your brand. The fundamental difference: traditional tools track website visits; AI visibility captures brand presence in conversational answers that don’t generate visits but significantly influence purchasing.

What are the best tools for measuring visibility in AI search platforms?

The right tool depends on budget and needs. Enterprise organizations benefit from seoClarity ArcAI or Profound, which offer deep analytics and API access. Growing businesses get value from Semrush’s AI Toolkit or Peec AI. Budget-conscious startups can start with ZipTie or Am I On AI for baseline visibility.

What metrics are most important when tracking AI search visibility?

Five critical metrics: Brand Mention Frequency (how often you appear), Citation Rate (percentage linking to your content), Share of Voice (visibility versus competitors), Context Quality (positive/neutral/negative mentions), and Prompt Coverage (query types triggering brand). Citation rate typically matters most because citations drive both traffic and authority.

How do I get started with AI visibility tracking for my brand?

Start with manual baseline testing: identify 20-30 important queries, test across ChatGPT, Google AI Overviews, and Perplexity, document where your brand appears, note competitor comparisons, identify gaps. This takes 2-4 hours but provides essential context before investing in tracking tools. Once you understand the baseline, select the appropriate tool and implement systematic monitoring aligned with business goals.
