- Understanding the AI Citation Landscape

- Technical Foundation: Make Your Content Accessible to AI

- Benchmark Your Current AI Visibility

- What Makes Content Citation-Worthy

- Build Citation-Ready Content Architecture

- 9 Proven Tactics to Increase AI Citations (GEO Research)

- Optimize Content Structure for LLM Parsing

- Leverage Third-Party Citations

- Measure and Improve Your Citation Performance

- Real-World Examples: Brands Winning AI Citations

- Schema Markup and Structured Data

- Common Mistakes That Block AI Citations

- Build for Citation, Not Just Ranking

- FAQ

AI-powered search is rewriting the rules of visibility. ChatGPT, Perplexity, Claude, and Google’s AI Overviews now generate 7.3 billion visits monthly. If your content isn’t structured for these platforms, you’re invisible to a massive segment of potential customers.

Getting cited by AI search engines requires three core elements: structured content that LLMs can easily parse, authoritative signals like statistics and expert quotes, and technical infrastructure (robots.txt, llms.txt, schema markup) that grants crawler access. AI models prioritize content with high parsing fidelity, clear entity naming, and data-rich answers positioned at the beginning of pages.

Here’s the challenge: ranking #1 on Google doesn’t guarantee AI citations. Reddit threads and Wikipedia entries frequently appear in ChatGPT responses, despite not always topping traditional search results. Why? Because LLMs evaluate content differently than search engines—they favor intrinsic quality over historical link equity.

This guide breaks down exactly how to engineer content that AI platforms trust, cite, and surface to users making purchase decisions.

Understanding the AI Citation Landscape

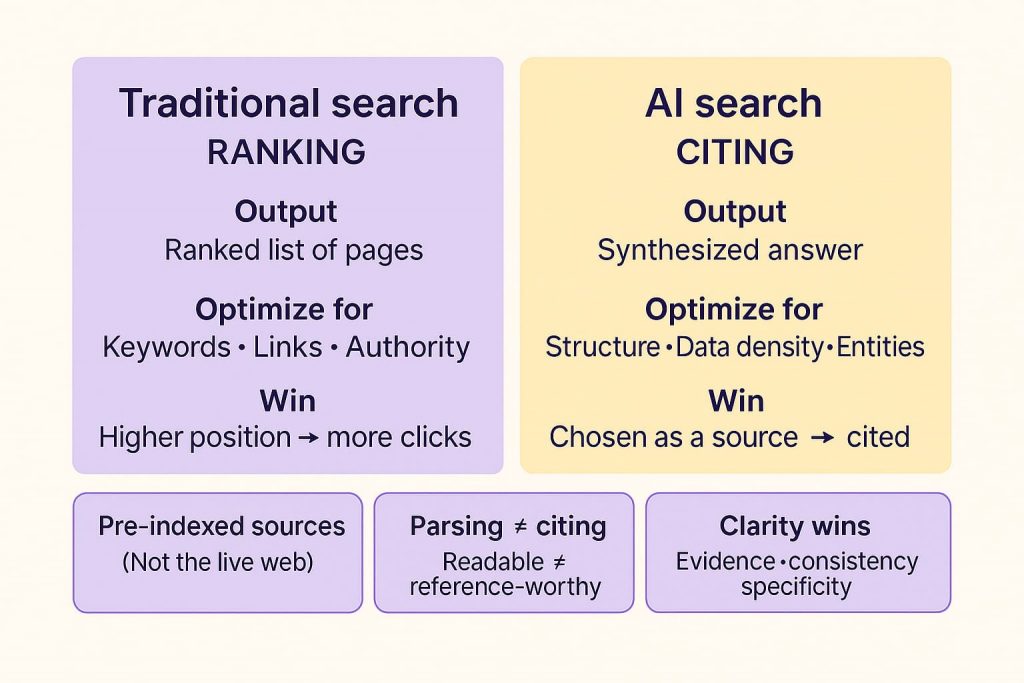

AI search differs fundamentally from traditional search engines. When you type a query into Google, you get a ranked list of pages. When you ask ChatGPT or Perplexity, you get a synthesized answer with attributed sources. This shift—from ranking to citing—changes everything about content strategy.

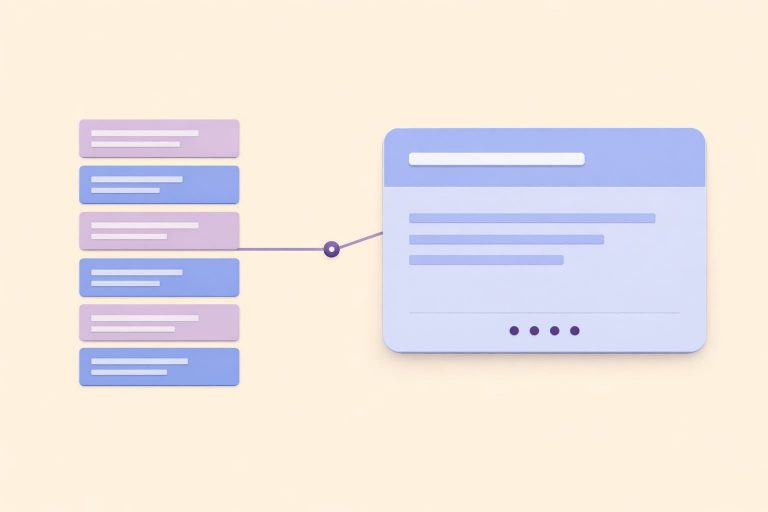

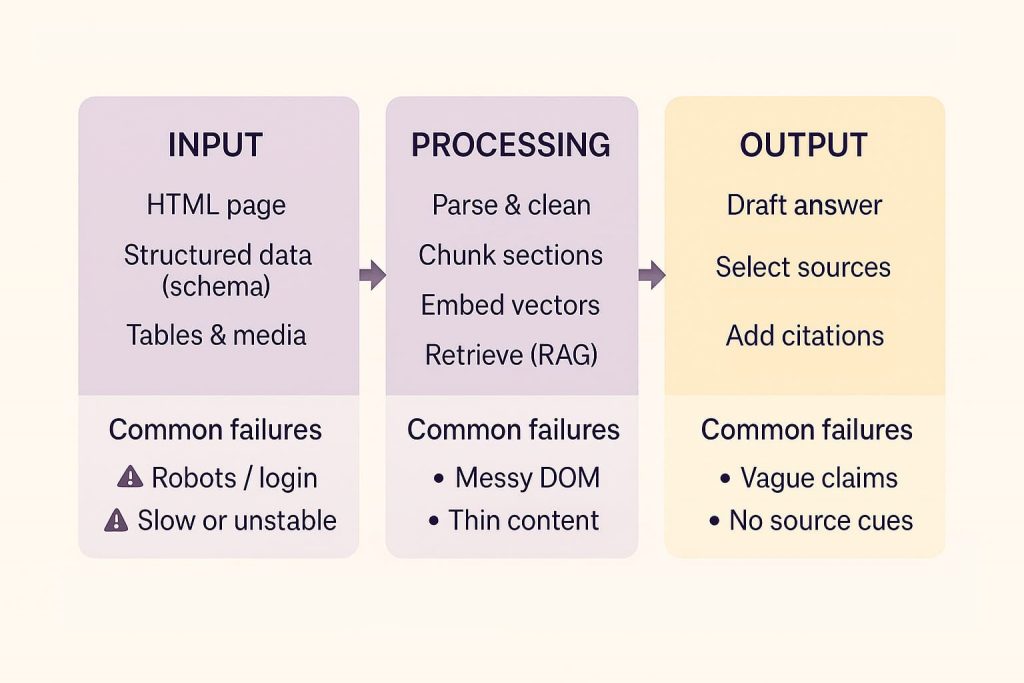

Large Language Models use Retrieval-Augmented Generation (RAG) to select sources. They don’t crawl the web in real-time. Instead, they reference pre-indexed content from search engine databases. ChatGPT pulls from Bing’s index, Claude uses Brave Search, and Gemini relies on Google. This means your content needs to be indexed by these platforms before AI models can even consider citing it.

Generative Engine Optimization (GEO) has emerged as the discipline for this new reality. Unlike traditional SEO—which focuses on keywords, backlinks, and domain authority—GEO prioritizes structure, data density, and entity depth. High Google rankings don’t guarantee AI citations because LLMs prioritize intrinsic content quality over historical link equity.

How LLMs Select Content to Cite

There’s a critical distinction between parsing and citing. Parsing means an LLM can read your content. Citing means it chooses to surface it in responses. Just because AI can access your page doesn’t mean it will reference it.

Structured Data enables Parsing Fidelity, which increases Citation Likelihood. According to OpenAI documentation, LLMs favor frequently-linked, highly-structured, semantically-consistent content. They extract information more reliably from pages with clear hierarchy, consistent entity naming, and explicit relationships between concepts.

Think of it this way: if your content requires significant interpretation to understand, LLMs won’t cite it—even if humans find it valuable. Citation-worthiness requires both technical accessibility and content quality that machines can verify.

The Difference Between SEO Ranking and AI Citation

Traditional SEO optimizes for keyword placement, backlink profiles, and domain authority. GEO extends these principles but focuses on different attributes: content structure, data density, and entity relationships.

Consider this: a perfectly optimized blog post might rank #3 on Google, but never appear in ChatGPT responses. Meanwhile, a technical documentation page from Stripe—structured as modular content objects with semantic markup—gets cited consistently despite not always ranking at the top.

The gap comes down to how each system evaluates authority. Google uses external signals (links, domain age, user behavior). LLMs use intrinsic signals (structure, data specificity, source attribution). You need both to win visibility in 2025’s search landscape.

Technical Foundation: Make Your Content Accessible to AI

Before focusing on content quality, you need proper technical infrastructure. Without it, your pages won’t reach LLM training or retrieval layers—no matter how brilliant your writing.

Three critical files control AI access:

- robots.txt grants or blocks crawler access from specific bots. Located at /robots.txt, it’s read by GPTBot (OpenAI), PerplexityBot (Perplexity), and ClaudeBot (Anthropic). If you’re blocking these crawlers, you’re blocking AI citations entirely.

- sitemap.xml lists all indexable pages on your site. Search engines use it to understand your site structure and prioritize crawling. Keep it updated and submit it through Google Search Console and Bing Webmaster Tools.

- llms.txt is an emerging standard specifically for AI platforms. It’s a Markdown-formatted file located at /llms.txt that curates priority content for LLMs. While not yet universally adopted, forward-thinking brands are implementing it to guide AI systems toward their most authoritative pages.

Here’s what each file does:

| File | Location | Who Reads It | Why It Matters |
|---|---|---|---|
| robots.txt | /robots.txt | GPTBot, PerplexityBot, ClaudeBot, search crawlers | Controls which bots can access your content |
| sitemap.xml | /sitemap.xml | Search engines (Google, Bing) | Lists indexable pages and crawl priorities |
| llms.txt | /llms.txt | LLMs (ChatGPT, Claude, Perplexity) | Curates priority content for AI platforms |

Implementation is straightforward. Verify your robots.txt allows LLM crawlers (check for “User-agent: GPTBot” followed by “Allow: /”). Ensure your sitemap includes all important pages. Consider adding llms.txt with a brief introduction to your brand and links to cornerstone content.
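As a rough sketch of what such an llms.txt file could contain, the snippet below renders a Markdown body with a title, a one-line summary, and a list of cornerstone links. The layout (H1, blockquote summary, H2 link sections) follows the llms.txt proposal as commonly described and should be treated as an assumption; the brand name, URLs, and the `build_llms_txt` helper are hypothetical.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt body: H1 title, blockquote summary, H2 link sections."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical brand and URLs, for illustration only.
llms_txt = build_llms_txt(
    "Example Co",
    "Example Co publishes guides on generative engine optimization.",
    {
        "Cornerstone content": [
            ("GEO guide", "https://example.com/geo-guide", "How to earn AI citations"),
        ]
    },
)
print(llms_txt)
```

The resulting text would be saved as /llms.txt at the site root.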

Blocking AI crawlers might seem like a way to protect proprietary content, but it guarantees zero visibility in AI-generated answers. If competitors allow access while you don’t, they’ll capture mindshare in the fastest-growing search channel.
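One way to check crawler access is Python's standard `urllib.robotparser`. The sketch below parses a robots.txt body and reports whether each of the three bots named above may fetch a path. The sample rules and the `crawler_access` helper are illustrative assumptions; in practice you would load your own file.

```python
from urllib.robotparser import RobotFileParser

AI_CRAWLERS = ["GPTBot", "PerplexityBot", "ClaudeBot"]


def crawler_access(robots_txt: str, path: str = "/") -> dict[str, bool]:
    """Return, per AI crawler, whether robots.txt allows fetching `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, path) for bot in AI_CRAWLERS}


# Hypothetical robots.txt that blocks GPTBot but allows everyone else.
SAMPLE = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

access = crawler_access(SAMPLE)
for bot, allowed in access.items():
    print(f"{bot}: {'allowed' if allowed else 'BLOCKED'}")
```

With this sample, GPTBot is reported as blocked while PerplexityBot and ClaudeBot fall through to the wildcard rule and are allowed.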

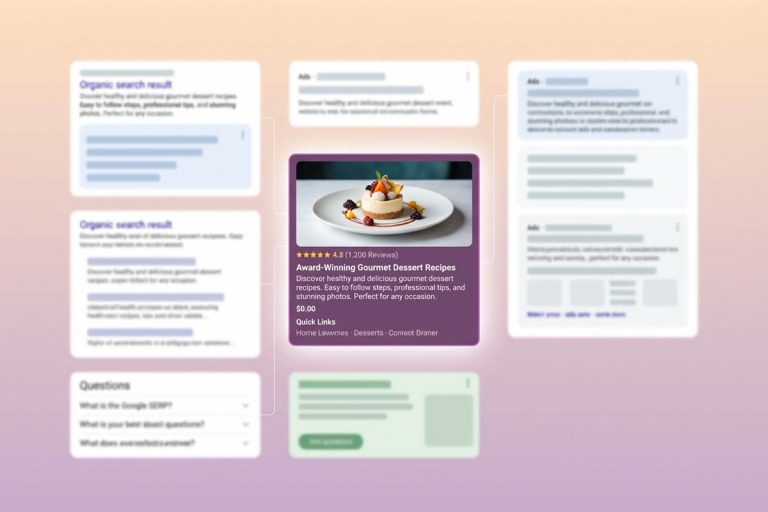

Benchmark Your Current AI Visibility

You can’t improve what you don’t measure. Before optimizing content, establish baseline visibility across AI platforms.

Track three dimensions: by platform (how often you appear in ChatGPT, Perplexity, Claude, and Gemini), by intent cluster (consistency across query variations), and by generation variability (reliability across repeated prompts with the same query).
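A minimal sketch of how these three dimensions could be tallied from logged test prompts follows. The observation records, query strings, and the `citation_rates` helper are hypothetical; real data would come from whatever monitoring tool or manual log you use.

```python
from collections import defaultdict

# Hypothetical log: (platform, query, was the brand cited?) per repeated prompt.
observations = [
    ("ChatGPT", "best crm for startups", True),
    ("ChatGPT", "best crm for startups", False),
    ("ChatGPT", "top startup crm tools", True),
    ("Perplexity", "best crm for startups", True),
    ("Perplexity", "best crm for startups", True),
    ("Perplexity", "top startup crm tools", False),
]


def citation_rates(rows, key):
    """Share of runs in which the brand was cited, grouped by key(row)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for row in rows:
        group = key(row)
        totals[group] += 1
        hits[group] += int(row[2])
    return {group: hits[group] / totals[group] for group in totals}


by_platform = citation_rates(observations, key=lambda r: r[0])
by_query = citation_rates(observations, key=lambda r: r[1])
# Same platform and same query, repeated: a rate strictly between 0 and 1
# means the citation is not stable from one generation to the next.
by_prompt = citation_rates(observations, key=lambda r: (r[0], r[1]))

print(by_platform)
print(by_query)
print(by_prompt)
```

Grouping by query approximates an intent cluster only if related phrasings are logged under a shared label, which this sketch does not attempt.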

Citation Score is a predictive metric for LLM surface probability. It combines parsing fidelity (how easily AI extracts information), authority signals (E-E-A-T validation), and structural quality (schema markup, entity consistency). Higher scores correlate with more frequent citations.

What to track:

- Position in multi-source answers: Are you the primary citation or a secondary reference?

- Sentiment: Is your brand represented accurately and positively?

- Context: Which other authorities appear alongside you? (If you’re cited next to Wikipedia and industry leaders, that’s a strong signal.)

Visibility Tracking identifies Content Gaps and guides Optimization Strategy. You might discover pages that should surface but don’t—or high-performing content needing structural upgrades to increase citation frequency.

Tools like VISIBLE™ Platform and Scrunch provide LLM visibility monitoring. They simulate AI queries and track which sources get cited. This data reveals blind spots: topics where you have expertise but lack AI visibility, or pages ranking well in Google but invisible to LLMs.

Finding these gaps early lets you prioritize optimization efforts where they’ll have maximum impact.

What Makes Content Citation-Worthy

Citation-Worthy Content is characterized by five core attributes:

- Structurability (modular, semantically tagged blocks).

- Data-Richness (quantitative metrics, statistics, original research).

- Source-Authority (E-E-A-T signals, domain authority, expert validation).

- Parsability (schema markup, clear entity naming, structured headers).

- Citation-Likelihood (scored 0-100 based on parsing fidelity plus authority signals).

LLMs prefer these attributes because they reduce parsing complexity and increase trust. Consider why Wikipedia, research papers, and technical documentation such as Stripe Docs and IBM Research publications excel: they’re engineered for machine comprehension, not just human readers.

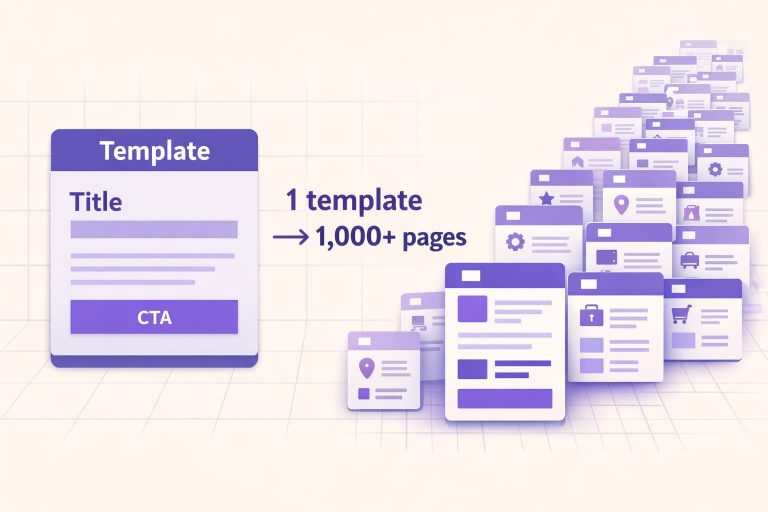

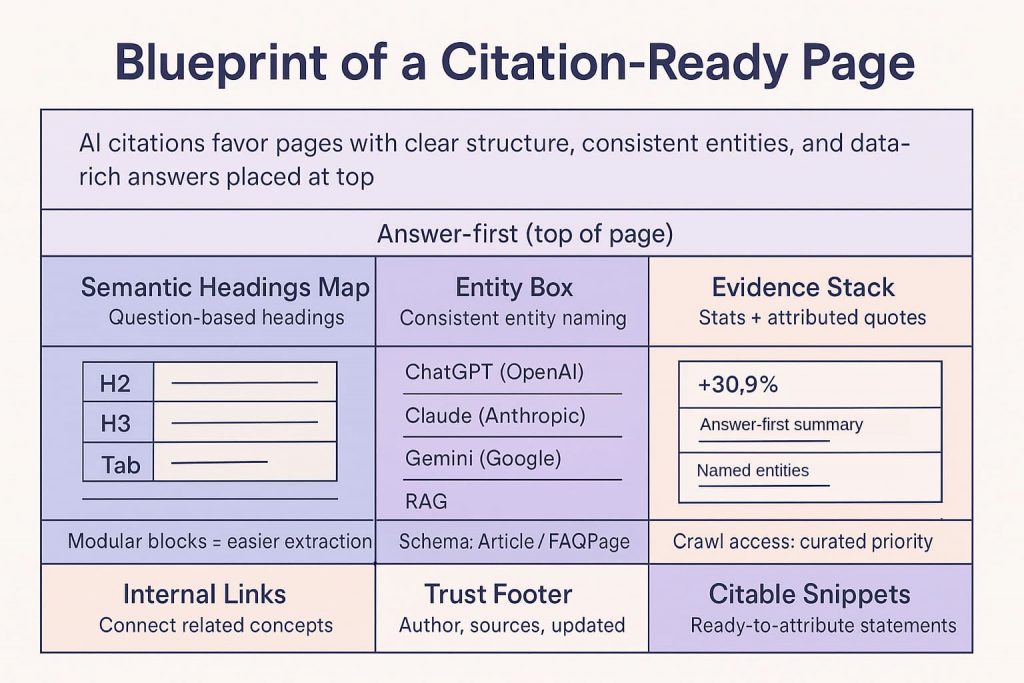

Structured Content Objects are modular content blocks with semantic tagging that enable high parsing fidelity. Instead of writing long-form prose, break information into discrete units: definitions, step-by-step processes, comparison tables, example scenarios. Each unit should be independently understandable and linkable.

Here’s the pattern: LLMs extract coherent conclusions from well-organized content. Poorly structured pages get parsed but not cited. The difference is whether AI can confidently attribute specific facts to your source—or has to skip you because the information is ambiguous.

E-E-A-T (Experience, Expertise, Authoritativeness, Trust) remains critical. Google’s quality guidelines matter to AI platforms because many LLMs use Google’s index as a data source. If your content demonstrates first-party experience, expert credentials, authoritative citations, and consistent entity references, both traditional search and AI platforms will favor it.

Build Citation-Ready Content Architecture

Implementing Structured Content Objects requires systematic entity management. Start with entity consistency: use full, uniform names throughout all content. If you’re writing about “Google Search Generative Experience,” don’t alternate between “Google SGE,” “Search Generative Experience,” and “SGE.” Clear Entity Naming improves Parsing Accuracy by 8%.
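Entity consistency can be spot-checked with a small script. The sketch below counts how many different name variants of one entity appear in a draft; more than one variant in the result suggests inconsistent naming. The variant list, sample text, and `entity_variants` helper are assumptions for illustration.

```python
import re
from collections import Counter


def entity_variants(text: str, variants: list[str]) -> Counter:
    """Count which spellings of an entity appear in `text`."""
    # Longest variants first, so "Google SGE" is not also counted as "SGE".
    ordered = sorted(variants, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(v) for v in ordered) + r")\b"
    )
    return Counter(match.group(0) for match in pattern.finditer(text))


sample = (
    "Google Search Generative Experience changes how results appear. "
    "Early tests of Google SGE showed AI summaries. "
    "Many marketers now track SGE results."
)
counts = entity_variants(sample, [
    "Google Search Generative Experience",
    "Google SGE",
    "Search Generative Experience",
    "SGE",
])
print(counts)
if len(counts) > 1:
    print("Inconsistent naming: pick one variant and use it everywhere.")
```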

Three implementation layers ensure content structure supports AI citations:

- Content structure: Break content into 3-5 sentence paragraphs. Use semantic subheadings—aim for 8+ per article. Employ Q&A formatting for natural queries. Lead with direct answers in the first 150 words, then expand with supporting detail.

- Entity implementation: Link entities to authoritative sources (official product pages, verified LinkedIn profiles, company about pages). Maintain consistent entity references across all content. When mentioning a company, product, or person for the first time, provide full context.

- Schema markup: Deploy Schema.org types like Article, FAQPage, and HowTo to label content structure. Use the sameAs property to link entities with verified profiles. Ensure structured data passes validation in Google’s Rich Results Test.

Look at how leading brands implement this. Stripe Docs structures every API endpoint as a discrete content object with embedded changelogs, code samples, and cross-references. HubSpot Blogs maintain consistent heading hierarchy, optimize meta-descriptions, and add author attribution boxes to every post. IBM Research includes persistent DOIs, comprehensive author metadata, and modular abstract summaries in whitepapers.

These brands build for models, not just users. Their structured design aligns with high parsing fidelity requirements—and LLMs cite them consistently as a result.

9 Proven Tactics to Increase AI Citations (GEO Research)

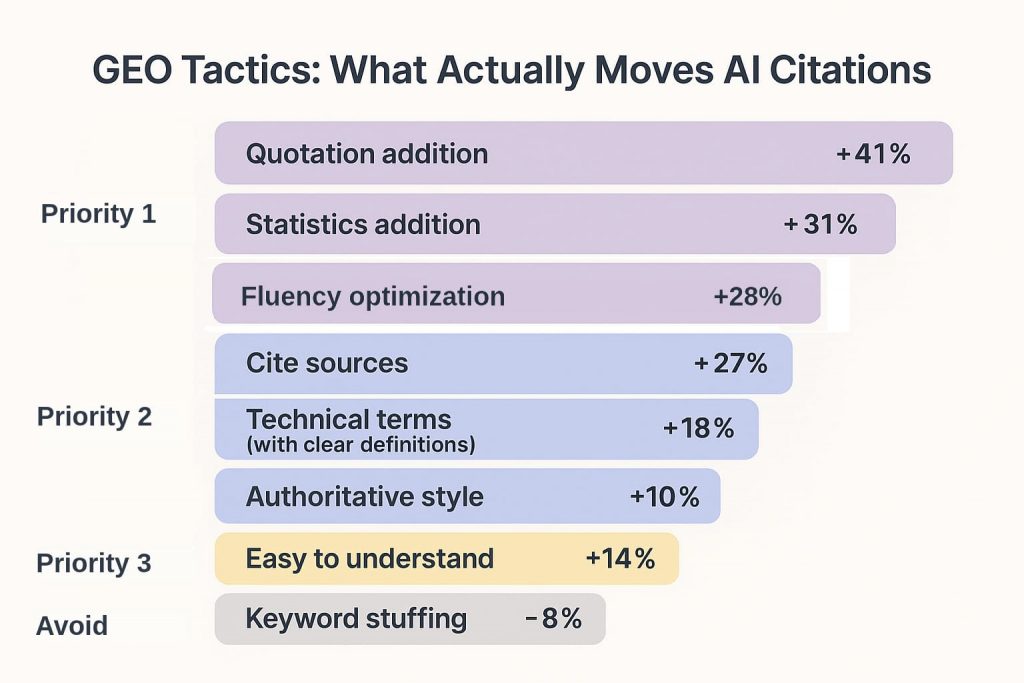

A June 2024 study by researchers from Princeton, Georgia Tech, the Allen Institute for AI, and IIT Delhi measured the impact of specific optimization tactics on AI citations. They used two metrics: Position-Adjusted Word Count (how much of your content appears in AI responses) and Subjective Impression (perceived quality and relevance of citations).

Here are the nine tactics, ranked by impact:

Priority 1: High-Impact Tactics (28%+ improvement)

- Quotation Addition: +41.1% position-adjusted word count, +28.1% subjective impression. Add relevant quotes from reliable references. LLMs anchor responses in authoritative snippets. When you include a quote like “According to HubSpot’s 2023 Marketing Report, 35% of executives prioritize conversion metrics,” you’re giving AI a citable data point it can extract and attribute.

- Statistics Addition: +30.9% / +22.7%. Insert numerical facts and proprietary data. Concrete statistics make content unique and citable. LLMs favor specific numbers over vague claims. “Companies using GEO tactics saw a 40% increase in AI citations” beats “many companies saw improvements.”

- Fluency Optimization: +28.0% / +13.5%. Proofread for clarity, fix typos, smooth logical gaps. Well-structured content is easier to extract. If your sentences are convoluted or your logic jumps around, LLMs skip to clearer sources.

Priority 2: Moderate-Impact Tactics (10-28% improvement)

- Cite Sources: +27.3% / +13.5%. Add references with proper attribution: “According to the 2022 CDC report…” This signals credibility and gives LLMs confidence in your data.

- Technical Terms: +17.6% / +10.9%. Leverage niche terminology with clear explanations. Advanced queries favor specialized content. If you’re writing about “parsing fidelity” or “Retrieval-Augmented Generation,” define terms but use precise language.

- Authoritative Style: +10.4% / +19.0%. Speak confidently and back claims with evidence. Credible tone matters when content is relevant and factual. Avoid hedging language like “might” or “possibly” when you have data to support statements.

Priority 3: Lower-Impact Tactics (6-14% improvement)

- Easy to Understand: +13.9% / +6.2%. Simplify complex topics. Use shorter sentences and bullet points where appropriate. But don’t sacrifice depth for simplicity, because technical audiences expect precision.

Tactics to AVOID

- Unique Words: +6.2% / +5.7%. Adding unusual vocabulary without purpose creates unhelpful fluff. Focus on clarity over creativity.

- Keyword Stuffing: -8.3% / +4.7%. Cramming keywords hurts performance. LLMs detect and penalize low-quality patterns, just like Google does.

Combining tactics amplifies impact. Fluency + statistics, fluency + quotes, or statistics + quotes yield approximately 5% additional improvement over individual tactics. Smaller or newer sites benefit more from these tactics than established domains, because LLMs prioritize intrinsic quality over link equity.
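Because each tactic carries two numbers, its rank depends on which metric you sort by. The short sketch below orders the nine tactics by each metric, using only the figures quoted in this section; the tuple layout is an illustrative choice.

```python
# (tactic, position-adjusted word count %, subjective impression %)
TACTICS = [
    ("Quotation Addition", 41.1, 28.1),
    ("Statistics Addition", 30.9, 22.7),
    ("Fluency Optimization", 28.0, 13.5),
    ("Cite Sources", 27.3, 13.5),
    ("Technical Terms", 17.6, 10.9),
    ("Authoritative Style", 10.4, 19.0),
    ("Easy to Understand", 13.9, 6.2),
    ("Unique Words", 6.2, 5.7),
    ("Keyword Stuffing", -8.3, 4.7),
]

by_word_count = sorted(TACTICS, key=lambda t: t[1], reverse=True)
by_impression = sorted(TACTICS, key=lambda t: t[2], reverse=True)

for name, word_count, impression in by_word_count:
    print(f"{name:22s} {word_count:+6.1f}%  {impression:+6.1f}%")
```

Sorted by subjective impression, Authoritative Style moves up to third place, which is worth keeping in mind when deciding which tactics to apply first.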

Implementing Authority Signals

E-E-A-T implementation breaks down into four components. Experience signals include first-party data and case studies showing you’ve actually done what you’re describing. Expertise indicators cover author credentials and technical depth—bio boxes, LinkedIn verification, relevant certifications. Authoritativeness markers come from citations by recognized sources (media mentions, industry publications, academic references). Trust factors include consistent entity references and verified information across your digital properties.

Include expert quotes properly attributed. Reference original research from HubSpot reports, IBM Research papers, or OpenAI documentation. Link to authoritative sources consistently.

The difference between fact presentation and opinion matters. Lead with verifiable data, then add expert interpretation. “AI search traffic exceeded 7.3 billion visits in July 2025” (fact) followed by “This shift represents the most significant change in search behavior since mobile-first indexing” (expert interpretation backed by the preceding fact).

Optimize Content Structure for LLM Parsing

Content Structure must precede Citation. Without parsing-friendly formatting, even authoritative content gets overlooked.

- Lead with direct answers: The first two sentences must directly satisfy main intent. “AI visibility measures how often your brand appears in AI-generated answers” beats “Understanding modern search requires examining multiple factors, including traditional rankings and newer metrics such as visibility across various platforms.”

- Paragraph discipline: Maximum 120 words per paragraph. Aim for 3-5 sentences. Break dense information into digestible blocks. Long paragraphs reduce parsing fidelity because LLMs extract better from modular content.

- Formatting hierarchy: Use bullet points for key takeaways, numbered lists for sequential steps, mini-tables for comparison data, and callout boxes for statistics or examples. Each format serves a purpose—don’t overuse any single approach.

- Semantic heading structure: Include 8+ subheadings per article. Use question-based H2s and H3s that mirror natural queries. “How Do LLMs Select Content?” beats “LLM Selection Process” because it matches actual search behavior.
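The paragraph and direct-answer rules above lend themselves to an automated check. The sketch below flags paragraphs over the 120-word ceiling and tests whether a chosen answer phrase appears within the first 150 words. The thresholds come from this guide; the `audit_structure` helper and the sample draft are hypothetical.

```python
import re

MAX_WORDS = 120      # paragraph ceiling quoted above
ANSWER_WINDOW = 150  # words within which the direct answer should appear


def audit_structure(text: str, answer_phrase: str) -> dict:
    """Flag over-long paragraphs and check the answer appears early."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    long_paragraphs = [
        index for index, p in enumerate(paragraphs)
        if len(p.split()) > MAX_WORDS
    ]
    opening = " ".join(text.split()[:ANSWER_WINDOW]).lower()
    return {
        "paragraphs": len(paragraphs),
        "long_paragraphs": long_paragraphs,  # zero-based paragraph indexes
        "answer_in_opening": answer_phrase.lower() in opening,
    }


draft = (
    "AI visibility measures how often your brand appears in AI-generated answers.\n\n"
    + " ".join(["filler"] * 130)
)
report = audit_structure(draft, "AI visibility measures")
print(report)
```

Paragraphs are split on blank lines, so the check assumes plain text or Markdown input rather than rendered HTML.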

Here’s a transformation example. Instead of: “AI visibility has become critical as LLMs summarize content and brands risk being uncited even when ranking high in traditional search results.”

Write: “AI visibility is now a key metric. LLMs summarize top-ranking pages. Poorly structured pages risk remaining uncited—even with high traditional rankings.”

The second version is easily human-readable and easily AI-extractable. Each sentence delivers a complete thought. The logic flows linearly. There’s no ambiguity about what each statement means.

Leverage Third-Party Citations

LLMs heavily pull from select third-party sources when synthesizing answers: review sites, media publishers, and resource hubs. If a third-party site already ranks highly or gets cited for your target query, earning presence there multiplies your visibility.

Canva’s citation acquisition strategy offers a model. First, identify third-party articles ranking or cited for target queries. Second, pitch inclusion by offering relevant data, quotes, or tools the publisher can link to. Third, make it easy—provide stats, original research, and complementary resources ready to embed.

Third-Party Citations increase Brand Authority, which improves LLM Citation Likelihood. When authoritative sites like HubSpot, TechCrunch, or industry-specific publications mention your brand with proper attribution and links, LLMs recognize these authority signals during retrieval.

Third-party validation carries higher weight than self-published content for newer brands. If you’re building authority from scratch, strategic partnerships with established publishers accelerate AI citation growth faster than creating content on your own domain alone.

This doesn’t mean abandoning owned content—it means creating a dual-channel strategy where authoritative third parties amplify your visibility while your owned properties build long-term equity.

Measure and Improve Your Citation Performance

The Citation Optimization Loop has six phases: Plan → Structure → Publish → Parse → Cite → Learn.

- Plan: Identify entities, schemas, and citation opportunities based on intent research. What questions does your audience ask? Which entities must you cover? What data can you provide that competitors can’t?

- Structure: Build Structured Content Objects with semantic metadata. Deploy schema markup. Ensure every page has clear entity naming and internal linking to related concepts.

- Publish: Use persistent URLs and canonical tags. Ensure indexable formats (HTML, not JavaScript-only rendering). Verify crawler access in robots.txt.

- Parse: Validate parsing via LLM simulation tools. VISIBLE™ Platform simulates how GPTBot and PerplexityBot interpret your content. Preview what AI sees before it goes live.

- Cite: Track surfaced citations across AI outputs. Monitor where content appears in ChatGPT, Perplexity, Claude, and Gemini responses. Note which pages get cited most frequently.

- Learn: Feed results into next iteration. Refine structure, optimize schema, maintain entity naming consistency based on actual inclusion data.

Three key measurement signals matter: Citation Share (frequency of content references), Sentiment (positive/neutral/critical mentions), and Authority Context (which other sources appear alongside yours—being cited next to Wikipedia and industry leaders validates your authority).
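Two of these signals, Citation Share and Authority Context, can be computed from a log of the source URLs each AI answer returned. The sketch below does this with the standard library; the URLs, the `example.com` domain, and the helper names are hypothetical, and sentiment is left out because it needs human or model judgment.

```python
from collections import Counter
from urllib.parse import urlparse


def domain(url: str) -> str:
    """Lower-cased host with a leading 'www.' removed."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def citation_report(answers: list[list[str]], own_domain: str):
    """Return (citation share, domains cited alongside own_domain)."""
    cited_in = [a for a in answers if own_domain in {domain(u) for u in a}]
    share = len(cited_in) / len(answers) if answers else 0.0
    co_cited = Counter(
        d for a in cited_in for d in {domain(u) for u in a} if d != own_domain
    )
    return share, co_cited


# Hypothetical source lists returned for four AI answers.
answers = [
    ["https://www.example.com/geo-guide", "https://en.wikipedia.org/wiki/Example"],
    ["https://en.wikipedia.org/wiki/Example"],
    ["https://example.com/pricing", "https://www.hubspot.com/blog"],
    ["https://www.hubspot.com/blog"],
]
share, co_cited = citation_report(answers, "example.com")
print(f"Citation share: {share:.0%}")
print("Cited alongside:", dict(co_cited))
```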

Tools streamline this process. VISIBLE™ Platform simulates LLM parsing, provides Citation Score metrics, Structure Graph Analysis, and Entity Depth Index. Scrunch tracks visibility across multiple LLM platforms, with alert systems for changes.

Content optimization is continuous. Even high-performing pages need periodic updates as AI models evolve. Adjust structure, optimize schema, and maintain entity naming consistency based on actual citation data—not assumptions about what might work.

Want a systematic approach to GEO implementation with transparent analytics and measurable business results? Lead Craft’s GEO services provide continuous citation monitoring across all AI platforms, with proven lead growth of 27-40% within 3-8 months.

Real-World Examples: Brands Winning AI Citations

The best way to understand citation-worthy implementation is seeing it in action.

- Stripe Docs: Every API endpoint is a structured content object. Cross-linked changelogs show version history. Persistent URLs ensure citations remain valid. Embedded code samples include semantic callouts explaining each parameter. This modular approach means LLMs can extract exactly what they need without parsing unnecessary context.

- HubSpot Blogs: Consistent heading hierarchy makes content scannable for humans and machines. Optimized meta-descriptions provide clear summaries. Strategic internal linking connects related concepts. Author attribution boxes establish expertise for every post. The result: HubSpot content appears consistently in AI-generated marketing advice.

- IBM Research: Whitepapers include persistent DOIs (Digital Object Identifiers) ensuring permanent citations. Comprehensive author metadata validates expertise. Modular abstract summaries allow LLMs to extract key findings without processing entire documents. Inline references connect to broader research networks.

The common pattern: these brands engineer content for models, not just humans. Their structured design aligns with high parsing fidelity requirements. This isn’t accidental: it follows principles of structured content engineering, entity consistency, and authority validation.

Smaller brands can replicate these approaches without enterprise budgets. Focus on modular content structure. Maintain clear entity naming. Implement schema markup. The tactics scale regardless of company size.

Schema Markup and Structured Data

Schema Markup provides Semantic Context, which enables LLM Understanding. Schema turns words into structured maps LLMs can follow, making content easier to include, cite, and trust.

Three strategic schema types matter most:

Article schema labels content type, author, publish date, and headline. This helps LLMs understand content authority and recency. Use it on blog posts, guides, and editorial content.

FAQPage schema explicitly marks question-answer pairs. Perfect for citation extraction because LLMs can pull exact Q&A segments. Implement on FAQ sections and Q&A-style content.

HowTo schema structures step-by-step instructions. Ideal for process-oriented content like tutorials and guides. Each step becomes a discrete entity LLMs can reference independently.
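To make the FAQPage case concrete, the sketch below builds JSON-LD from question-answer pairs using the Schema.org `FAQPage`, `Question`, and `Answer` types. The sample question and the `faq_jsonld` helper are illustrative; the output should still be run through a validator before publishing.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize question-answer pairs as Schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical FAQ entry, for illustration only.
markup = faq_jsonld([
    ("What is GEO?",
     "Generative Engine Optimization structures content so AI platforms can cite it."),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```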

The sameAs property links entities to verified profiles. Connect your brand to LinkedIn, Crunchbase, Wikipedia, and official brand pages. This establishes entity authority and consistent identity across the web.
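A minimal sketch of `sameAs` in practice: an Organization block listing the brand's external profiles. The organization name and every URL below are placeholders, and `organization_jsonld` is a hypothetical helper.

```python
import json


def organization_jsonld(name: str, url: str, profiles: list[str]) -> str:
    """Serialize an Organization with sameAs links to external profiles."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "Organization",
            "name": name,
            "url": url,
            "sameAs": list(profiles),
        },
        indent=2,
    )


# Placeholder brand and profile URLs, for illustration only.
org_markup = organization_jsonld(
    "Example Co",
    "https://example.com",
    [
        "https://www.linkedin.com/company/example-co",
        "https://www.crunchbase.com/organization/example-co",
    ],
)
print(org_markup)
```

Using the same `name` string here as in page copy and metadata supports the consistency requirement.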

Consistency requirement: maintain uniform entity naming across all pages, metadata, and content hubs. If you reference “Search Generative Experience” on one page and “Google SGE” on another, LLMs struggle to track connections reliably.

Sites implementing HowTo and FAQ schema—recipe pages, instructional guides—index faster and appear more frequently in AI-generated previews. Structured data isn’t just technical compliance. It’s a strategic framework providing measurable visibility and authority improvements.

Common Mistakes That Block AI Citations

Even well-intentioned optimization can fail if you make these citation-deficient mistakes:

- Blocking LLM crawlers: If robots.txt blocks GPTBot, PerplexityBot, or ClaudeBot, your content can’t reach LLM indices, which cuts your visibility on those platforms to zero. Check your robots.txt file immediately.

- Keyword stuffing: Cramming keywords without context causes -8.3% citation impact. LLMs detect and penalize low-quality patterns, just like traditional search engines.

- Burying key facts: Placing critical answers deep in long paragraphs reduces parsing fidelity. Direct answers must appear in the first 150 words. If users have to scroll or search to find your main point, AI won’t cite you.

- Inconsistent entity naming: Alternating between “Google SGE” and “Search Generative Experience” confuses entity recognition. Maintain uniform terminology throughout all content.

- Missing schema markup: Content without structured data is harder for LLMs to interpret and categorize. Even basic Article schema improves citation likelihood.

- Lack of authority signals: Content without citations, statistics, or expert quotes appears less credible. In the GEO study, adding quotations improved position-adjusted word count by 41%.

- Poor content structure: Walls of text without semantic breaks reduce extractability. If humans struggle to scan your content, LLMs definitely will.

Avoiding these mistakes is as important as implementing best practices. Sometimes the fastest path to improved citations is removing what’s blocking them—not adding more optimization layers.

Build for Citation, Not Just Ranking

The shift from ranking to citation changes content strategy fundamentally. You’re no longer optimizing solely for Google’s algorithm—you’re engineering for how AI models parse, understand, and attribute information.

Citation-worthiness requires structured strategy, not accidental optimization. Recap the core requirements:

- technical infrastructure (robots.txt allowing crawlers, llms.txt curation, schema markup implementation),

- content architecture (Structured Content Objects, clear entity naming, direct answer positioning),

- authority signals (quotations +41%, statistics +31%, E-E-A-T implementation),

- measurement and iteration (Citation Score tracking, visibility monitoring, continuous optimization).

Brands engineering citation-ready content—not just optimizing keywords—will dominate AI search visibility. Traditional SEO still matters for Google rankings, but GEO determines whether ChatGPT, Perplexity, Claude, and other AI platforms trust your content enough to cite it.

Start by assessing current citation-readiness using visibility tracking tools. Implement technical foundation first—without proper crawler access, nothing else matters. Layer optimization tactics systematically based on GEO research data. Measure inclusion across LLM platforms and iterate based on actual citation performance.

If your content isn’t structured to be cited by models, it’s invisible to the next generation of search. The question isn’t whether to optimize for AI citations—it’s whether you’ll do it before your competitors do.

FAQ

Does Google punish AI-generated content?

Google evaluates content based on E-E-A-T signals (Experience, Expertise, Authoritativeness, Trust), not generation method. AI-generated content that demonstrates expertise, includes original insights, and provides value is not penalized. Focus on citation-worthy attributes: structured data, authoritative sources, and clear entity relationships. Quality matters more than origin.

Which type of information does not usually require citation?

Common knowledge facts, general definitions, and widely accepted information traditionally don’t require citations. However, for AI citation optimization, citing authoritative sources even for known facts can help: the Cite Sources tactic improved position-adjusted word count by 27.3% in the GEO research. When in doubt, cite, because it strengthens trust signals.

What are good examples of citations in AI responses?

Effective citations include structured, attributed, data-rich statements like “According to HubSpot’s 2023 Marketing Report, 35% of executives prioritize conversion metrics” or entity-specific, verifiable, technically precise examples like “Stripe Docs implements modular API endpoints with cross-linked changelogs.” Both provide clear attribution and specific data LLMs can extract confidently.

How can I improve my citation rate in AI responses?

Implement the Citation Optimization Loop: ensure robots.txt allows LLM crawlers (GPTBot, PerplexityBot, ClaudeBot), add llms.txt to curate priority content, deploy schema markup (FAQPage, HowTo, Article), and structure content with quotations (+41% boost) and statistics (+31% boost). Measure performance with tools like VISIBLE™ Platform and iterate based on actual citation data.

What should I avoid when optimizing for AI citations?

Avoid keyword stuffing (-8.3% impact), adding unusual words without purpose (only a +6.2% gain), burying key facts in long paragraphs, inconsistent entity naming, and blocking LLM crawlers in robots.txt. Focus on parsing fidelity over keyword density. Structure, authority, and clarity matter more than clever writing or keyword manipulation.
