- How Generative AI Works: Training to Content Creation

- Foundation Models and Transformer Architecture

- Leading Generative AI Tools and Platforms

- Business Applications and Real-World Use Cases

- Training and Fine-Tuning Processes

- Risks and Limitations of Generative AI

- Adoption Trends and Economic Impact

- Getting Started with Generative AI

- Conclusion

- FAQs

Picture this: A marketing team that once took three weeks to produce a campaign now does it in three days. A developer completes coding tasks up to 55% faster. A customer service department that handled 1,000 daily inquiries now manages 4,000 with the same headcount. This isn’t science fiction: it’s what organizations are reporting right now with generative AI.

Generative AI is artificial intelligence technology that creates original content (text, images, code, video, and audio) using foundation models trained on massive datasets. Unlike traditional AI that classifies or predicts, generative AI produces new output from a prompt. Tools like ChatGPT are built on transformer-based deep learning models with billions of parameters that capture context and generate human-quality responses.

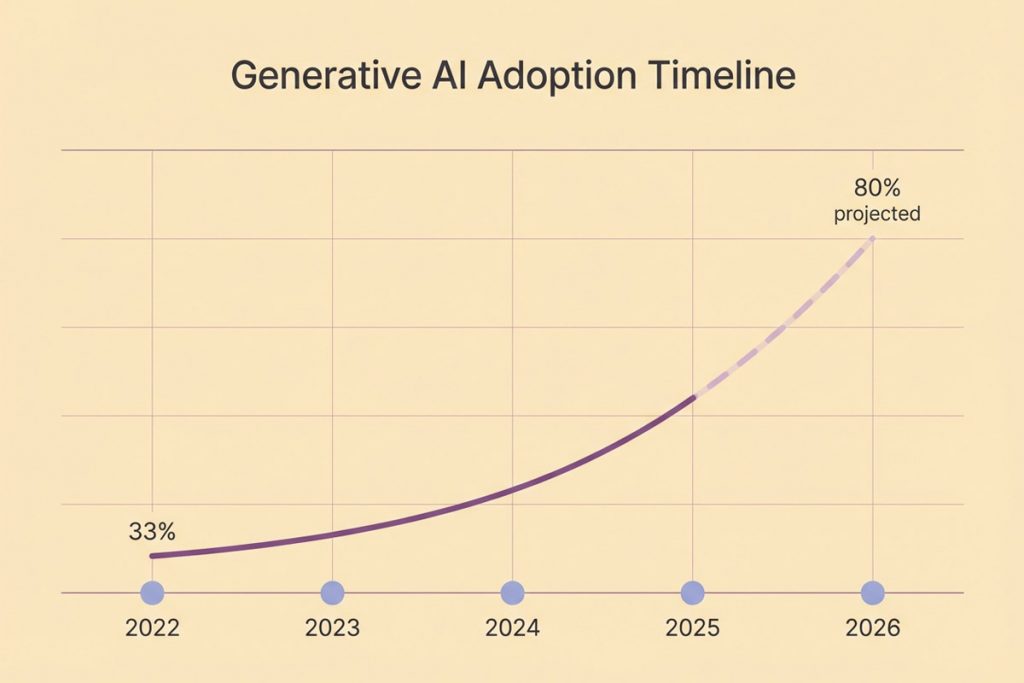

Here’s the twist: McKinsey estimates gen AI could add up to $4.4 trillion in annual economic value globally. In McKinsey’s 2023 survey, about a third of organizations reported using it regularly in at least one business function. Gartner projects that more than 80% of enterprises will have used gen AI APIs or deployed gen AI-enabled applications by 2026. When ChatGPT launched in November 2022, it reached an estimated 100 million users in two months, at the time the fastest growth of any consumer application. That explosion signals something deeper: foundation models have unlocked AI’s creative potential, transforming how businesses approach content strategy, code development, and customer engagement.

How Generative AI Works: Training to Content Creation

Think of gen AI as learning through pattern recognition at massive scale, then applying those patterns to create something new. The process unfolds in three distinct phases that turn raw data into creative output.

Phase 1: Training the Foundation Model

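Foundation models train on enormous collections of text and media. For GPT-3, OpenAI filtered roughly 45TB of compressed Common Crawl web text down to about 570GB and combined it with books, Wikipedia, and other web sources. Through self-supervised learning, the model learns to predict what comes next: the next word (more precisely, the next token) in a sentence, or the next token in code; image models use related objectives. After each batch of predictions, gradient descent adjusts the model’s internal weights to reduce its prediction error. GPT-3 ended up with 175 billion of these parameters: numeric weights that together encode statistical patterns in how language and code work.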

This training phase costs millions of dollars. Commonly cited estimates put GPT-3 at roughly $2-5 million in compute, and newer frontier models are reported to cost far more, requiring weeks to months of processing across thousands of GPUs. The result? A foundation model that captures context, semantics, and structure across whatever domain it learned.
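To make "predict what comes next" concrete, here is a toy sketch in Python. It is a deliberate simplification. It counts which word follows which (a bigram model) instead of training a neural network with gradient descent. The tiny corpus is invented for illustration. Real foundation models learn billions of weights over subword tokens, but the underlying idea is the same: predict the next token from context.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """'Train' by counting how often each word follows each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn follow-counts for `word` into a probability distribution over next words."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Greedy prediction: the most probable next word, or None if nothing ever followed it."""
    probs = next_word_probs(model, word)
    return max(probs, key=probs.get) if probs else None


if __name__ == "__main__":
    # Hypothetical three-sentence corpus, purely for illustration.
    corpus = [
        "the model predicts the next word",
        "the model learns patterns",
        "the next word depends on context",
    ]
    model = train_bigram(corpus)
    print(next_word_probs(model, "the"))  # {'model': 0.5, 'next': 0.5}
    print(predict_next(model, "model"))   # 'predicts' (tie with 'learns'; first seen wins)
```

A neural model replaces the count table with learned weights. That lets it generalize to word sequences it never saw verbatim.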

Phase 2: Fine-Tuning for Specialization

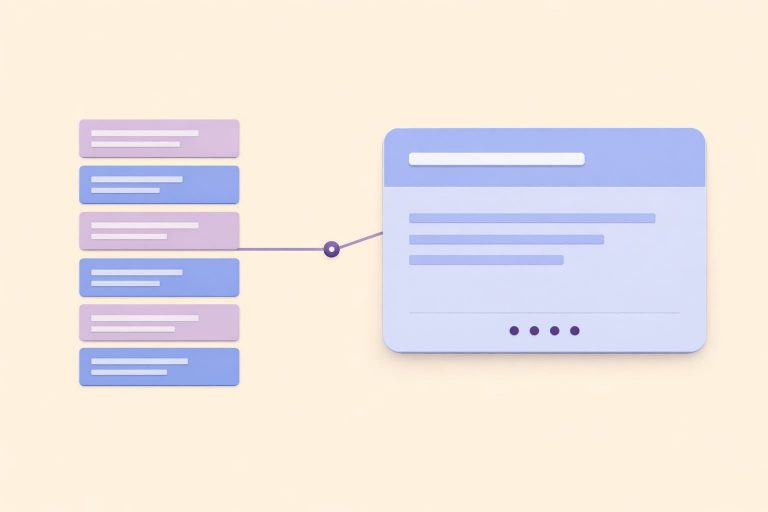

Raw foundation models lack focus. Fine-tuning customizes them for specific tasks, such as customer service chatbots, legal document generation, or medical diagnosis support. Two related techniques improve accuracy. RLHF (Reinforcement Learning from Human Feedback) trains models on human preferences, teaching them which responses people find helpful. RAG (Retrieval Augmented Generation) connects models to external knowledge bases and pulls in current information beyond their training data. It does this at query time rather than by changing the model’s weights.

This specialization transforms a generalist into an expert. A generic model might generate text about law, but a fine-tuned legal AI understands precedent, jurisdiction-specific rules, and proper citation formats.

Phase 3: Generation

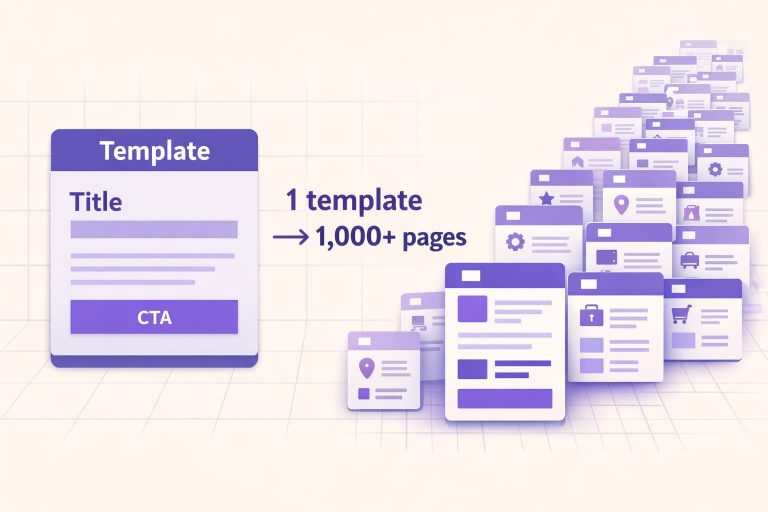

You provide a prompt. The model analyzes the context and generates new content (text, images, code) piece by piece, typically choosing each next token by sampling from the probabilities it learned. Outputs arrive in seconds and are usually new combinations rather than stored answers, though models can occasionally reproduce memorized passages from their training data.
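Generation is usually a loop that samples one token at a time from the model’s predicted probability distribution. The sketch below is hypothetical. It hard-codes the raw scores (logits) a model might output for a few candidate next words, then shows how a temperature setting reshapes the softmax before sampling. Low temperatures make the top choice dominate and give near-deterministic output. Higher temperatures spread probability out, which is one reason the same prompt can produce different answers.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token according to the temperature-adjusted distribution."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # Hypothetical logits for the word after "The weather today is".
    logits = {"sunny": 2.0, "cloudy": 1.5, "purple": -1.0}
    for t in (0.2, 1.0, 2.0):
        print(t, {tok: round(p, 3) for tok, p in softmax(logits, t).items()})
    rng = random.Random(0)  # fixed seed so the run is reproducible
    print([sample_next(logits, 1.0, rng) for _ in range(5)])
```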

Generative AI vs Traditional AI

The difference between traditional AI and generative AI comes down to what they produce. Traditional AI analyzes and predicts. Fraud detection systems classify transactions as legitimate or suspicious, recommendation engines predict which products you’ll buy, and predictive models forecast sales trends. Generative AI creates original content that didn’t exist before: ChatGPT writes articles, DALL-E generates images, and GitHub Copilot produces code.

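Both share neural network foundations, but their training objectives differ. Traditional (discriminative) AI learns to map inputs to labels or values and is judged on how accurately it classifies or forecasts. Generative AI learns the patterns of the data itself so it can produce new examples; language models do this by learning to predict the next token. The quality of what it generates (how realistic, relevant, and useful it is) is then judged through human evaluation and benchmarks.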
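One way to state this contrast precisely uses standard probabilistic notation; the symbols are conventional rather than taken from a specific source. A discriminative model learns the probability of a label y given an input x. A generative model learns the distribution of the data itself so it can sample new x. Autoregressive language models such as GPT factor that distribution token by token. They train by minimizing the negative log-likelihood (cross-entropy) of each actual next token:

```latex
\begin{aligned}
\text{Discriminative:}\quad & \text{learn } p_\theta(y \mid x), \text{ e.g. } p(\text{fraud} \mid \text{transaction}) \\
\text{Generative (autoregressive):}\quad & p_\theta(x_1, \dots, x_T) = \prod_{t=1}^{T} p_\theta(x_t \mid x_1, \dots, x_{t-1}) \\
\text{Training loss:}\quad & \mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_1, \dots, x_{t-1})
\end{aligned}
```

Generation is then repeated sampling from the learned conditional probabilities, one token at a time.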

Think of it as a nested hierarchy. Machine Learning is the broad category encompassing all algorithms that learn from data. Deep Learning, a subset that uses multi-layered neural networks, enables more complex pattern recognition. Generative AI, built largely on deep learning architectures, represents the creative frontier: its models don’t just recognize patterns but use them to generate entirely new outputs.

Foundation Models and Transformer Architecture

Foundation models are the engines powering generative AI: massive, versatile systems trained on terabytes of data that serve as the base for many applications. GPT-3 contains 175 billion parameters. OpenAI has not disclosed GPT-4’s size, though unofficial estimates put it around 1.7 trillion. Each parameter is a learned numerical weight, and together they determine how the model processes and generates information. Building these models costs millions of dollars in computational resources alone.

The breakthrough behind modern foundation models came in 2017, when Vaswani et al. at Google introduced the transformer architecture in the paper “Attention Is All You Need.” Before transformers, leading language models (recurrent neural networks) processed text sequentially: read one word, then the next, like following a line of dominoes. Transformers rely on self-attention, which processes entire sequences in parallel. The model can “pay attention” to any word in a sentence regardless of position. That is how it tells that “bank” means something different in “river bank” versus “savings bank”: it weighs the surrounding context.
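The attention computation itself is compact. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d)·V, the core operation of the 2017 transformer. It is deliberately simplified. It uses one attention head, no learned projection matrices, and no masking. Tiny hand-picked vectors stand in for the query, key, and value vectors a real model would compute. Every token’s output is a weighted mix of all tokens’ values, computed for the whole sequence at once rather than word by word.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]], keys: list[list[float]], values: list[list[float]]
) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product attention over lists of equal-length vectors.

    For each query: score it against every key, scale by sqrt(d),
    softmax the scores into weights, and return the weighted sum of values.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # Hypothetical 2-d vectors for the tokens "river", "bank", "water".
    tokens = ["river", "bank", "water"]
    x = [[1.0, 0.0], [0.6, 0.8], [0.9, 0.1]]
    # Self-attention: queries, keys, and values all come from the same sequence.
    # (Real transformers first multiply x by learned matrices W_Q, W_K, W_V.)
    _, weights = attention(x, x, x)
    for tok, w in zip(tokens, weights):
        print(tok, [round(v, 2) for v in w])
```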

Here’s how it works: text is first split into tokens, and each token is converted into an embedding, a list of numbers that captures meaning. Words with similar meanings cluster together in this numerical space. The original transformer paired an encoder with a decoder; GPT-style models use only the decoder stack. Either way, the embeddings pass through many layers, each refining the model’s representation of the context before it generates output.
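Here is a toy sketch of "similar meanings cluster together." The three-dimensional vectors are invented for illustration; real embeddings are learned and have hundreds or thousands of dimensions. The whitespace "tokenizer" stands in for the subword tokenizers real models use. Cosine similarity, a common way to compare embeddings, comes out higher for related words.

```python
import math

# Hypothetical embedding table: word -> vector. Real tables are learned during training.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.85, 0.75, 0.2],
    "invoice": [0.1, 0.2, 0.95],
    "<unk>": [0.0, 0.0, 0.0],
}


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


def embed(text: str) -> list[list[float]]:
    """Look up one vector per token, falling back to <unk> for unknown words."""
    return [EMBEDDINGS.get(tok, EMBEDDINGS["<unk>"]) for tok in tokenize(text)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    cat, dog, invoice = embed("cat dog invoice")
    print("cat~dog", round(cosine(cat, dog), 3))          # close to 1
    print("cat~invoice", round(cosine(cat, invoice), 3))  # much lower
```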

The landscape is democratizing. Meta’s Llama 2 and other openly available (open-weight) foundation models lower barriers to entry, letting smaller organizations build specialized applications without training models from scratch. You can fine-tune existing foundation models for specific industries, such as legal, medical, or financial work, at a fraction of the original training cost.

Generative Model Architecture Comparison

| Architecture | Year Introduced | Core Strengths | Typical Applications | Output Quality | Training Complexity |
|---|---|---|---|---|---|
| VAE (Variational Autoencoder) | 2013 | Smooth latent space interpolation, generates diverse outputs, good for data compression | Image generation, anomaly detection, data denoising, recommendation systems | Good for variations, can be blurry | Moderate - easier to train than GANs |
| GAN (Generative Adversarial Network) | 2014 | High-quality realistic images, sharp details, excellent for visual content | Photo-realistic image generation, style transfer, video synthesis, deepfakes | Excellent visual quality, very realistic | High - requires careful balancing of generator/discriminator |
| Transformer | 2017 | Parallel processing, attention mechanism, scales with data, handles long-range dependencies | Language models (GPT, ChatGPT), translation, text generation, code, multimodal AI | Superior for text and sequential data, context-aware | Very high - requires massive compute but highly scalable |

Leading Generative AI Tools and Platforms

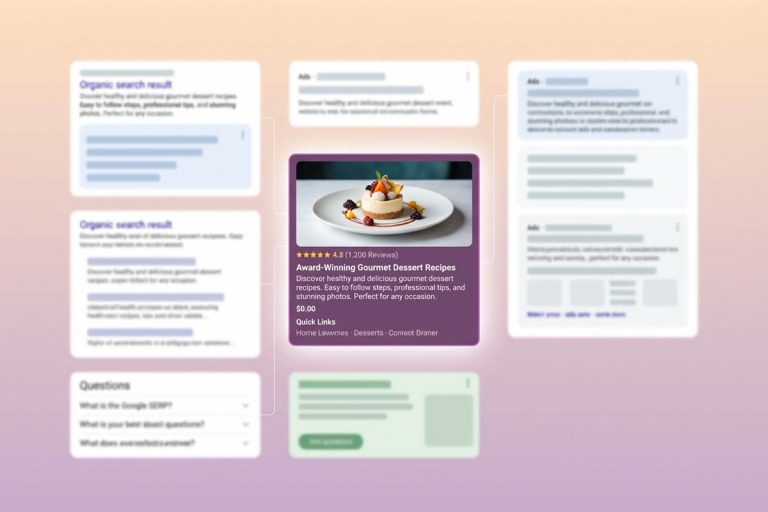

Yes, ChatGPT is generative AI, and currently the most famous example. OpenAI released ChatGPT in November 2022 on GPT-3.5 and added GPT-4 for paid users in 2023. It reached an estimated 100 million users in just two months. It handles text generation, coding assistance, analysis, and conversational interactions across dozens of languages.

But ChatGPT competes in a rapidly expanding field.

- Claude, developed by Anthropic, offers longer context windows (up to 200,000 tokens, versus 128,000 for GPT-4 Turbo) and emphasizes safety through Constitutional AI training.

- Google Gemini offers multimodal capabilities—processing text, images, and code simultaneously—with tight integration into Google Workspace for enterprise users.

- Image generation tools demonstrate generative AI’s visual creativity. DALL-E (OpenAI) excels at precise prompt interpretation, Stable Diffusion (Stability AI) provides open-source flexibility, and Midjourney focuses on artistic, stylized outputs favored by creative professionals.

- Code generation accelerates software development. GitHub Copilot suggests entire functions based on comments, while Tabnine offers privacy-focused alternatives for enterprises concerned about proprietary code exposure.

| Tool | Content Type | Base Model | Starting Cost | Enterprise Ready |
|---|---|---|---|---|
| ChatGPT | Text, Code | GPT-4 | $20/month | Yes (Team/Enterprise) |
| Claude | Text, Code | Claude 3 | $20/month | Yes (API available) |
| Gemini | Text, Images, Code | Gemini Pro | $20/month | Yes (Workspace integration) |
| DALL-E | Images | DALL-E 3 | Pay-per-image | Limited |
| Stable Diffusion | Images | SDXL | Free (open-source) | Self-hosted |
| GitHub Copilot | Code | OpenAI models (Codex, later GPT-4) | $10/month | Yes |

The choice depends on your use case: ChatGPT for conversational breadth, Claude for nuanced long-form content, Gemini for multimodal applications, and specialized tools for images or code.

Business Applications and Real-World Use Cases

The main goal of generative AI is productivity enhancement through automation—and businesses are capturing that value across every department. Let’s be honest: this isn’t about replacing humans; it’s about amplifying what they can accomplish.

Content Creation transforms marketing workflows. Generative AI drafts blog posts, social media campaigns, and ad copy, and teams report production-time reductions of 40-60%. Style guidelines in prompts help keep brand voice consistent and content optimized for SEO. Marketing teams use AI to generate dozens of headline variations for A/B testing, create personalized email sequences at scale, and adapt content across channels, work that previously required entire teams. AI-powered SEO tools analyze performance and suggest improvements in near real time.

Code Generation accelerates software development measurably. In a controlled experiment reported by GitHub, developers using Copilot completed a coding task 55% faster. The AI drafts common patterns and boilerplate. Its suggestions are not guaranteed to be correct, so developers still review and test them. Developers describe it as pair programming with an infinitely patient partner who’s read millions of code repositories. The AI handles boilerplate code while humans focus on architecture and business logic.

Customer Service reaches 24/7 availability through AI-powered chatbots, which vendors report can handle 70-80% of routine queries instantly. These systems resolve password resets, track orders, answer product questions, and escalate complex issues to human agents. Deployed chatbots don’t learn automatically from each chat. They improve when teams review conversations and update prompts, knowledge bases, or models. Organizations report customer service capacity increases of up to 300% without proportional headcount growth.

Design and Creative applications generate product mockups, marketing variations, and A/B testing materials in minutes rather than days. Designers iterate faster, testing more concepts before committing resources to production.

Document Automation handles contracts, invoices, and reports, extracting data from various sources and generating formatted outputs that previously required manual review and assembly.

Data Analysis synthesizes insights from datasets too large for manual review, identifying patterns and generating actionable recommendations. Financial analysts use gen AI to summarize earnings reports, legal teams review contracts for risk factors, and researchers analyze study data for trends.

McKinsey’s breakdown of that $4.4 trillion economic impact shows where value concentrates: customer operations ($1 trillion through automated support and personalization), marketing and sales ($1.2 trillion via content generation and targeting), and software development ($850 billion from code automation and testing). Research and development captures $450 billion through accelerated experimentation and simulation. Early adopters report ROI within 6-12 months as AI augments human capabilities rather than replacing them.

Training and Fine-Tuning Processes

Foundation models start generic—trained on everything from medical journals to romance novels. Fine-tuning customizes these models for specific applications, adding the specialized knowledge that transforms a generalist into an expert.

The process takes a pre-trained foundation model and trains it further on a targeted dataset. ChatGPT’s base model learned conversational behavior through fine-tuning on example dialogues. A legal AI trains on case law, contracts, and regulatory documents; a medical diagnostic assistant learns from clinical studies and patient records. Fine-tuning can continue the self-supervised objective on unlabeled domain text, which reduces the need for expensive human-labeled datasets. That objective is predicting the next token, or masked tokens for BERT-style models. Instruction tuning, by contrast, uses curated prompt-and-response examples.
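Much of the practical work in fine-tuning is preparing examples. The sketch below writes prompt-and-response pairs as JSON Lines. It uses the chat-style `messages` layout (system, user, and assistant roles) that OpenAI documents for fine-tuning its chat models. Other providers use similar but not identical schemas, so treat the exact field names as an assumption and check your provider’s documentation. The legal system prompt and sample pairs are hypothetical.

```python
import json

SYSTEM_PROMPT = "You are a legal research assistant. Cite sources precisely."  # hypothetical


def to_chat_record(question: str, answer: str, system: str = SYSTEM_PROMPT) -> dict:
    """Wrap one Q&A pair in a chat-style fine-tuning record."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(pairs: list[tuple[str, str]], path: str) -> int:
    """Write one JSON object per line, skipping incomplete pairs. Returns the count written."""
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            if not question.strip() or not answer.strip():
                continue  # an empty side gives the model nothing useful to learn
            f.write(json.dumps(to_chat_record(question, answer), ensure_ascii=False) + "\n")
            written += 1
    return written


if __name__ == "__main__":
    examples = [
        ("What does 'stare decisis' mean?",
         "It is the doctrine that courts generally follow precedent set by earlier decisions."),
        ("", "An answer with no question is dropped."),
    ]
    print(write_jsonl(examples, "train.jsonl"), "records written")
```

Curating and cleaning records like these is usually where most fine-tuning effort goes. It also determines most of the quality.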

Here’s what makes this powerful: you’re not starting from scratch. The foundation model already understands language structure, reasoning patterns, and general knowledge. Fine-tuning adds domain expertise—legal terminology, medical protocols, financial regulations—without requiring the massive computational resources of initial training.

RLHF (Reinforcement Learning from Human Feedback) improves model behavior by having human evaluators compare and rank outputs. A reward model learns to predict those preferences. The language model is then optimized with reinforcement learning to produce responses the reward model scores highly. Together with ongoing updates informed by real-world usage, this helps explain why ChatGPT improved quickly after launch. RLHF is particularly effective for subjective qualities like helpfulness, tone, and style that are difficult to capture in traditional training data.
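The reward-modeling step in RLHF is commonly trained with a pairwise loss. A labeler marks which of two responses is better, and the reward model is penalized when it scores the rejected response higher. The sketch below computes that loss, −log σ(r_chosen − r_rejected), from made-up scores. It omits the neural reward model itself. It also omits the later reinforcement-learning step (often PPO) that tunes the chatbot against the learned reward.

```python
import math


def pairwise_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry style loss: small when the preferred response scores higher.

    -log(sigmoid(m)) equals log(1 + exp(-m)); the two branches compute it
    without overflow for large positive or negative margins m.
    """
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs."""
    return sum(pairwise_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward-model scores for (preferred, rejected) answers to three prompts.
    scores = [(2.1, 0.3), (0.5, 0.4), (-0.2, 1.0)]  # the last pair is ranked the wrong way
    for c, r in scores:
        print(f"chosen={c:+.1f} rejected={r:+.1f} loss={pairwise_loss(c, r):.3f}")
    print("mean loss:", round(mean_loss(scores), 3))
```

Minimizing this loss over many labeled comparisons teaches the reward model to agree with human rankings. The last pair, where the model disagrees with the labeler, contributes the largest loss.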

Why fine-tuning matters: generic models lack the domain expertise that specialized applications demand. A general-purpose model might produce legal text that sounds plausible but misstates a rule or a citation. That gap becomes critical when accuracy has real-world consequences.

The economics make specialization accessible. Training a foundation model can cost $2-5 million or more. Fine-tuning that model for your industry? Roughly $10,000-$100,000. That is a 20x to 500x cost reduction, which puts enterprise-grade AI within reach of mid-sized organizations.

RAG and Prompt Engineering for Accuracy

Two techniques dramatically improve generative AI accuracy without expensive retraining.

RAG (Retrieval Augmented Generation) connects foundation models to external knowledge bases. Before generating a response, the model retrieves relevant, current information from databases, documentation, or web sources. This solves two critical problems: access to data beyond the model’s training cutoff date, and reduced hallucinations through grounding responses in verified sources. A customer service AI using RAG pulls product specifications from live inventory systems rather than relying on potentially outdated training data.
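Here is a minimal sketch of the retrieve-then-generate flow, under heavy simplifying assumptions. The "knowledge base" is an in-memory list of hypothetical product notes. Retrieval is plain word-overlap scoring rather than the vector search production systems typically use. The code stops at building the grounded prompt that would be sent to whichever model you use; no real API is called.

```python
import re

# Hypothetical knowledge base, e.g. synced from a live inventory system.
DOCUMENTS = [
    "Model X200 blender: 1200 W motor, 2-year warranty, in stock.",
    "Model X300 blender: 1500 W motor, 3-year warranty, backordered until March.",
    "Returns are accepted within 30 days with a receipt.",
]


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by shared words with the query; keep the top k that overlap at all."""
    q = words(query)
    scored = sorted(((len(q & words(d)), d) for d in docs), key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_grounded_prompt(query: str, docs: list[str]) -> str:
    """Instruct the model to answer only from retrieved context, or admit it doesn't know."""
    context = "\n".join(f"[{i + 1}] {d}" for i, d in enumerate(retrieve(query, docs)))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    print(build_grounded_prompt("What warranty does the X300 blender have?", DOCUMENTS))
```

Because the answer comes from the retrieved note rather than the model’s memory, updating the documents updates the answers without retraining.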

Prompt Engineering is the systematic craft of designing inputs for optimal outputs. Effective techniques include:

- Clear, specific instructions (“Write a 500-word blog post about solar panels for homeowners”)
- Examples showing the desired format
- Role assignment (“You are a financial advisor helping retirees”)
- Requests for step-by-step reasoning to improve logical consistency

Good prompting can often reduce or remove the need for expensive fine-tuning; for many tasks, a well-crafted prompt gets specialist-quality outputs from general models.
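The techniques above (role assignment, explicit instructions, format examples, and a cue for step-by-step reasoning) can be combined into a reusable template. The helper below is a hypothetical sketch that only assembles text. Its name and fields are illustrative and not tied to any vendor’s API.

```python
def make_prompt(role: str, task: str, examples: list[tuple[str, str]], step_by_step: bool = True) -> str:
    """Assemble a structured prompt from a role, a task, few-shot examples, and a reasoning cue."""
    parts = [f"You are {role}.", f"Task: {task}"]
    for i, (example_input, example_output) in enumerate(examples, start=1):
        parts.append(f"Example {i} input: {example_input}\nExample {i} output: {example_output}")
    if step_by_step:
        parts.append("Think through the problem step by step before giving the final answer.")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(make_prompt(
        role="a financial advisor helping retirees",
        task="In about 300 words, explain whether solar panels pay off for homeowners on fixed incomes.",
        examples=[("Topic: heat pumps", "Headline: Cut Winter Bills Without Cutting Comfort")],
    ))
```

Keeping prompts in a template like this makes them easier to version, test, and refine iteratively.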

Guardrails implement rules preventing harmful, biased, or off-topic responses. These filters catch inappropriate content before it reaches users, maintaining brand safety and regulatory compliance. Organizations layer multiple guardrails: input validation, content filtering, output verification, and human oversight for high-stakes decisions.
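A layered guardrail can be sketched as checks before and after the model call. This sketch is illustrative only. The blocked-topic list, the email-redaction regex, and the stand-in `fake_model` function are all hypothetical. Keyword rules like these are far weaker than the classifier-based moderation that production systems usually add on top.

```python
import re
from typing import Callable, Optional

BLOCKED_TOPICS = ("make a weapon", "credit card dump")  # hypothetical policy list
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def check_input(prompt: str) -> Optional[str]:
    """Input validation: return a refusal reason, or None if the prompt may proceed."""
    lowered = prompt.lower()
    for topic in BLOCKED_TOPICS:
        if topic in lowered:
            return f"blocked topic: {topic}"
    return None


def filter_output(text: str) -> str:
    """Output filtering: redact email addresses before the response reaches a user."""
    return EMAIL.sub("[redacted email]", text)


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Run input validation, call the model, then filter its output."""
    reason = check_input(prompt)
    if reason:
        return f"Sorry, I can't help with that ({reason})."
    return filter_output(model(prompt))


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "Contact our support lead at jane.doe@example.com for details."


if __name__ == "__main__":
    print(guarded_call("How do I reset my password?", fake_model))
    print(guarded_call("How do I make a weapon at home?", fake_model))
```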

Risks and Limitations of Generative AI

Generative AI’s power creates proportional risks that organizations must address through systematic mitigation strategies.

Hallucinations (plausible but inaccurate outputs) are estimated to occur in 5-15% of responses, with rates varying widely by model, task, and guardrails. In a 2023 New York case, lawyers submitted a brief citing ChatGPT-generated cases that didn’t exist and were sanctioned by the court. For legal, medical, or financial applications, this risk level demands human verification. Hallucinations are especially dangerous because they’re convincing enough to bypass casual review.

AI Bias originates from training data that reflects societal prejudices around gender, race, and culture. Amazon abandoned an experimental machine-learning recruiting tool after finding it penalized résumés associated with women; it had learned from historical hiring patterns that favored men. Generative models can absorb the same kinds of skew. Biased outputs can affect hiring decisions, lending approvals, and content recommendations, amplifying discrimination at scale. Mitigation requires diverse training data, continuous bias testing, and regular model evaluation.
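Continuous bias testing can start with simple audits of outcomes. One widely used screen in US hiring is the four-fifths rule. If one group’s selection rate is below 80% of the highest group’s rate, the result warrants review for adverse impact. The sketch below computes that ratio for hypothetical screening decisions. It is a coarse first check, not a complete fairness evaluation.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of candidates selected, per group."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, float]:
    """Groups whose selection rate is below `threshold` x the best group's rate, with their ratios."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Hypothetical outputs of an AI resume screener: (group, advanced_to_interview)
    decisions = ([("men", True)] * 30 + [("men", False)] * 70
                 + [("women", True)] * 18 + [("women", False)] * 82)
    print(selection_rates(decisions))  # men 0.30, women 0.18
    print(adverse_impact(decisions))   # women flagged at a ratio of about 0.6
```

Running a check like this on every model update turns "continuous bias testing" into a concrete, repeatable step.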

Deepfakes enabled by generative AI create convincing fake videos, audio, and images used for misinformation, fraud, and reputation damage. Detection methods are improving but often lag behind generation capabilities. In 2024, a finance employee at a multinational firm’s Hong Kong office transferred about $25 million after a video call in which criminals used deepfakes to impersonate the company’s CFO and other colleagues.

Intellectual Property concerns intensify as models train on copyrighted content. Who owns AI-generated outputs? Can you copyright AI-created art? Courts are addressing these questions through ongoing litigation, but full legal clarity may take years.

Data Privacy vulnerabilities emerge when models memorize and potentially leak training data. Security threats include prompt injection attacks (manipulating AI through crafted inputs), jailbreaking (bypassing safety restrictions), and adversarial inputs designed to produce harmful outputs.

Accuracy Limitations extend beyond hallucinations. Models struggle with complex math, make reasoning errors, and lack knowledge of events after their training cutoff dates. Environmental impact matters too: training a large model can consume as much electricity as a hundred or more homes use in a year.
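Here is a back-of-the-envelope check of the homes comparison. Both inputs are assumptions. The first is a published estimate of about 1,287 MWh for GPT-3’s training run (Patterson et al., 2021). The second is roughly 10,700 kWh of electricity per average US household per year. Other models, hardware, and data centers will give very different numbers.

```python
def households_equivalent(training_mwh: float, household_kwh_per_year: float) -> float:
    """How many average households' annual electricity use equals one training run."""
    return training_mwh * 1000 / household_kwh_per_year  # convert MWh to kWh first


if __name__ == "__main__":
    # Assumptions: ~1,287 MWh estimate for GPT-3; ~10,700 kWh per US home per year.
    homes = households_equivalent(1287, 10_700)
    print(f"about {homes:.0f} homes for a year")  # about 120
```

Larger successors are generally estimated to use considerably more energy, so this figure is best read as a lower reference point.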

Practical Mitigation Strategies:

Human-in-the-loop systems validate AI outputs before deployment. Continuous evaluation catches accuracy drift. Guardrails prevent harmful responses. Diverse training data reduces bias. Transparency about AI involvement builds trust. Specialized domain models increase accuracy for critical applications. The key principle: augment human judgment, don’t replace it.
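Human-in-the-loop review works best when the system decides automatically what a person must check. Here is a hypothetical sketch based on the fabricated-citation risk described earlier. It extracts case citations from a draft and compares them against a trusted index; a hard-coded set stands in for a real legal database. Any draft with unverified citations goes to a reviewer instead of going out. The citation pattern is a simplified assumption, not a complete legal citation parser.

```python
import re
from dataclasses import dataclass, field

# Simplified pattern: volume, a few common US reporters, page (e.g. "347 U.S. 483", "999 F.3d 123").
CITATION = re.compile(r"\b\d{1,4} (?:U\.S\.|F\.[234]d|F\. Supp\. [23]d) \d{1,5}\b")

# Hypothetical stand-in for a lookup against a real citation database.
TRUSTED_CITATIONS = {"410 U.S. 113", "347 U.S. 483"}


@dataclass
class ReviewDecision:
    needs_human: bool
    unverified: list[str] = field(default_factory=list)


def review(draft: str, trusted: set[str] = TRUSTED_CITATIONS) -> ReviewDecision:
    """Route a draft to a human if any citation cannot be matched to the trusted index."""
    found = CITATION.findall(draft)
    unverified = [c for c in found if c not in trusted]
    return ReviewDecision(needs_human=bool(unverified), unverified=unverified)


if __name__ == "__main__":
    draft = (
        "As held in Brown v. Board of Education, 347 U.S. 483, and in "
        "Smith v. Jones, 999 F.3d 123, the motion should be granted."
    )
    print(review(draft))  # the invented Smith v. Jones citation is sent to a reviewer
```

The design choice here is to automate only the cheap, reliable check (does this citation exist?). Judgment about whether a real case actually supports the argument is left to the human reviewer.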

Adoption Trends and Economic Impact

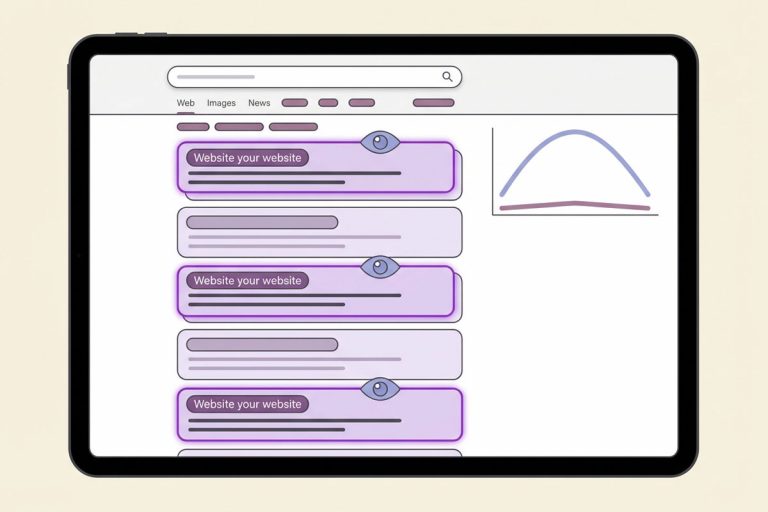

Adoption is accelerating quickly. In McKinsey’s 2023 survey, about a third of organizations reported regularly using generative AI in at least one business function. Gartner projects that more than 80% of enterprises will have used gen AI APIs or deployed gen AI-enabled applications by 2026, roughly four years after ChatGPT’s launch.

The economic stakes justify the urgency. McKinsey estimates generative AI could add up to $4.4 trillion in annual value globally across industries: customer operations ($1 trillion through chatbots and automated support), marketing and sales ($1.2 trillion via content generation and personalization), software development ($850 billion from code automation), and R&D ($450 billion through accelerated research and simulation).

Productivity gains can appear quickly. Teams report content creation running 40-60% faster. In GitHub’s controlled experiment, developers using Copilot completed a coding task 55% faster. Organizations report customer service capacity increases of up to 300% as AI handles routine queries, freeing human agents for complex issues. Early adopters report positive ROI within 6-12 months, not years, as AI augments existing workflows without requiring complete system overhauls.

But adoption drives business transformation beyond efficiency. Companies are redefining workflows, restructuring teams, and evolving job roles. The shift isn’t automating tasks—it’s reimagining what’s possible. Marketing teams produce 10x more content variations for testing. Developers focus on architecture while AI handles boilerplate code. Customer service becomes proactive rather than reactive.

ChatGPT’s cultural impact (an estimated 100 million users in two months, at the time the fastest growth of any consumer application) signals a lasting shift. Organizations that delay adoption risk competitive disadvantage as AI literacy becomes a baseline skill.

Getting Started with Generative AI

For Individuals: Start with free tools—ChatGPT, Claude, or Google Gemini—and experiment. Learn prompt engineering basics through trial: be specific (“Write a 300-word product description for organic coffee targeting health-conscious millennials”), provide examples of desired output, and refine iteratively based on results. The investment is time, not money, and the skills transfer across all AI tools.

For Businesses: Identify high-value use cases where generative AI solves actual problems. Content creation, customer service, and code generation offer measurable ROI. Run pilot programs with small teams, measure results rigorously, then scale successful applications. Avoid the “AI for everything” trap—focus beats breadth.

Integration considerations matter from day one. Evaluate API access for custom applications, ensure data security protocols meet compliance requirements, and plan for employee training on prompt engineering, understanding limitations, and verification procedures.

Tool selection depends on content type needs, budget constraints, and enterprise features like SSO and data privacy guarantees. The relationship between successful implementation and human-AI hybrid approaches is direct—automation handles repetitive tasks while humans provide strategic thinking and quality oversight.

Organizations seeking professional implementation can explore specialized services that handle the technical complexity of AI integration. Lead Craft’s generative engine optimization services help businesses navigate content restructuring, AI citation tracking, and performance measurement, with clients typically seeing ROI within 3-8 months and qualified lead increases of 27-40%. Early adopters gain competitive positioning before market saturation makes AI literacy standard rather than differentiating.

Conclusion

Generative AI creates original content through foundation models trained on massive datasets, a fundamental shift from AI that analyzes to AI that generates. The numbers point to a major transformation. McKinsey estimates up to $4.4 trillion in annual economic value. Gartner projects that more than 80% of enterprises will be using gen AI by 2026. Reported productivity gains include 40-60% faster content creation and 55% faster completion of coding tasks.

But opportunity demands responsibility. Foundation models power unprecedented creative capability, yet hallucinations can undermine accuracy in an estimated 5-15% of outputs. The solution isn’t avoiding AI; it’s implementing human oversight, guardrails, and continuous evaluation. Organizations should start with pilot programs testing specific use cases, measure results rigorously against baselines, and scale strategically with proper risk mitigation.

The technology evolves rapidly—what’s cutting-edge today becomes standard tomorrow. Early adopters gain competitive advantages while others struggle to catch up. McKinsey, Gartner, and OpenAI research consistently validates gen AI’s transformative impact across industries, from marketing automation to scientific research acceleration.

The question isn’t whether to adopt generative AI, but how quickly you can implement with the right balance of automation and human judgment. The tools exist. The ROI is proven. The competitive advantage awaits those who act strategically.

FAQs

What AI is not generative?

Traditional AI focuses on classification and prediction, not content creation. Examples include recommendation systems (Netflix suggesting shows), fraud detection algorithms (flagging suspicious transactions), and predictive maintenance models (forecasting equipment failures). These systems analyze patterns but don’t generate original output—they categorize, score, or predict based on existing data.

What is the difference between OpenAI and generative AI?

OpenAI is a research organization that develops generative AI products like ChatGPT and DALL-E. Generative AI is the broader technology category that OpenAI and competitors like Anthropic (Claude) and Google (Gemini) use. Think of it this way: generative AI is the technology, OpenAI is one company building products with it.

Why are people against generative AI?

Concerns include job displacement as automation replaces human tasks, biased outputs from training data reflecting societal prejudices around gender and race, deepfakes enabling misinformation campaigns, copyright issues over training on protected content, and hallucinations producing convincing but false information. Each risk requires human verification and continuous monitoring.

What are the 7 main types of AI?

AI categories by capability include reactive machines (chess AI responding to current board state), limited memory (self-driving cars using recent sensor data), theory of mind (emotional AI, still developing), and self-aware (hypothetical future AI). By scope: narrow AI handles specific tasks, general AI would match human-level reasoning, and superintelligence would exceed human capabilities across all domains.

Can AI be 100% trusted?

No. Generative AI produces hallucinations (plausible but inaccurate outputs) in an estimated 5-15% of cases, depending on the model, guardrails, and domain complexity. Organizations must implement human oversight, content verification, and continuous evaluation. Critical decisions in legal, medical, or financial contexts always require human verification before action.

What is generative AI for dummies?

Think of generative AI as super-smart autocomplete that learned patterns from billions of examples. You give it a prompt like “Write a product description,” and it creates new text by predicting what should come next based on everything it learned during training. The same principle applies to images, code, and audio.

When was generative AI invented?

Generative models have a long history. Modern deep generative AI took shape with VAEs (Variational Autoencoders) in 2013 and GANs (Generative Adversarial Networks) in 2014. The transformer architecture introduced by Vaswani et al. in 2017 then enabled today’s breakthrough capabilities. ChatGPT’s November 2022 launch brought mainstream awareness and rapid adoption.
